UPDATED:

21st April, 2014

YouTube caching now works using LUSCA and the storeurl.pl method. Tested and working well so far; only Dailymotion remains now.

==========================================================================

You can also try this (for automated installation of Squid on Ubuntu).

As we all know, the MikroTik web proxy is a basic proxy package suitable for basic caching, but it cannot cache dynamic content, YouTube videos and many other kinds of content. To accomplish this, you have to add a SQUID proxy server, route all HTTP traffic from MikroTik to Squid, and then configure Squid 2.7 STABLE9 with the storeurl URL rewrite.

I wrote an easy guide to compiling Squid from its source package and configuring it to cache video and other content. It has worked well to date and caches most videos, including YouTube and many others. I have listed a few websites that cache well.

Usually, this sort of caching is possible only with commercial products, like a Squid add-on named CACHEVIDEO, or with hardware products. But with some R&D, trial and error, and some working config examples, the caching worked. Please be aware that I have not reinvented the wheel; the method has been out there for a few years, but with some modifications and updates it now works very well. I am still working to improve it. This config has a few junk entries that are outdated or no longer required. You should do some research on it, for example a few refresh_pattern options that are not supported in 2.7.

This guide is actually a collection of Squid and storeurl configuration guides picked up from multiple public and shared resources. It's not 100% perfect, but it does its job at an acceptable level :), and above all, IT'S FREE 😉 and we all love free items 😀 don't we?

/ zaib

Below is a quick reference guide for installing Squid 2.7 STABLE9 on Ubuntu 10.04 (or 12.04) with caching support for YouTube and a few other kinds of content (or on any Linux flavor with Squid 2.7, because the storeurl method is supported in Squid 2.7 only).

The following websites have been tested and are working well 🙂

- General download websites → like filehippo & many others

- APPLE application downloads → like .ipsw or http://www.felixbruns.de/iPod/firmware/

- http://www.youtube.com → Videos Tested

- http://www.tune.pk → Videos Tested [most videos come from YouTube]

- http://www.apniisp.com → Audio & Videos Tested

- http://www.facebook.com → Videos Tested [https not supported ***]

- http://www.vimeo.com → Videos Tested

- www.blip.tv → Videos Tested

- http://www.aol.com → Videos Tested

- http://www.msn.com → Videos Tested

- http://www.dailymotion.com → Partially Working

- http://www.metacafe.com → Videos Tested

- some pron sites 😦 → please block all porn sites using OpenDNS or another mechanism

- http://www.tube?.com → Videos Tested

- http://www.youjiz?.com/ → Videos Tested

- http://www.?videos.com → Videos Tested

- http://www.pr0nhu?.com → Videos Tested

- and many others

If this method helps you, please post a comment.

OK, here we start . . .

First, update Ubuntu and install some support tools needed to compile Squid:

apt-get update
apt-get install -y gcc build-essential sharutils ccze libzip-dev automake1.9
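Before running `./configure`, you can confirm that the compiler and `make` pulled in by the packages above are actually on the PATH. A small bash sketch; the function name `missing_tools` is made up for illustration:

```bash
#!/usr/bin/env bash
# missing_tools NAME...: print every command name (one per line) that is
# NOT found on the PATH. Prints nothing when all of them are present.
missing_tools() {
    local tool
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Usage before building Squid 2.7 (configure, make, make install):
#   missing=$(missing_tools gcc make)
#   [ -n "$missing" ] && echo "Install these first: $missing" >&2
```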

Now download and extract the SQUID 2.7 STABLE9 source:

mkdir /temp
cd /temp
wget https://mikrotik-squid.googlecode.com/files/squid-2.7.STABLE9%2Bpatch.tar.gz
tar xvf squid-2.7.STABLE9+patch.tar.gz
cd squid-2.7.STABLE9

Now configure SQUID for compilation. You can add or remove configure options as required.

./configure --prefix=/usr --exec_prefix=/usr --bindir=/usr/sbin --sbindir=/usr/sbin --libexecdir=/usr/lib/squid --sysconfdir=/etc/squid \
--localstatedir=/var/spool/squid --datadir=/usr/share/squid --enable-async-io=24 --with-aufs-threads=24 --with-pthreads --enable-storeio=aufs \
--enable-linux-netfilter --enable-arp-acl --enable-epoll --enable-removal-policies=heap,lru --with-aio --with-dl --enable-snmp \
--enable-delay-pools --enable-htcp --enable-cache-digests --disable-unlinkd --enable-large-cache-files --with-large-files \
--enable-err-languages=English --enable-default-err-language=English --with-maxfd=65536

Or, if you are on a 64-bit system, try the following:

./configure \
--prefix=/usr \
--exec_prefix=/usr \
--bindir=/usr/sbin \
--sbindir=/usr/sbin \
--libexecdir=/usr/lib/squid \
--sysconfdir=/etc/squid \
--localstatedir=/var/spool/squid \
--datadir=/usr/share/squid \
--enable-async-io=24 \
--with-aufs-threads=24 \
--with-pthreads \
--enable-storeio=aufs \
--enable-linux-netfilter \
--enable-arp-acl \
--enable-epoll \
--enable-removal-policies=heap,lru \
--with-aio --with-dl \
--enable-snmp \
--enable-delay-pools \
--enable-htcp \
--enable-cache-digests \
--disable-unlinkd \
--enable-large-cache-files \
--with-large-files \
--enable-err-languages=English \
--enable-default-err-language=English \
--with-maxfd=65536 \
--enable-carp \
--enable-follow-x-forwarded-for \
'amd64-debian-linux' 'build_alias=amd64-debian-linux' 'host_alias=amd64-debian-linux' 'target_alias=amd64-debian-linux' 'CFLAGS=-Wall -g -O2' 'LDFLAGS=-Wl,-Bsymbolic-functions' 'CPPFLAGS='

Now run the make and make install commands.

[To understand what configure, make and make install do, read the following:

http://www.codecoffee.com/tipsforlinux/articles/27.html]

make
make install

Create the log folder (if it does not exist yet) and give the proxy user write permission:

mkdir -p /var/log/squid
chown proxy:proxy /var/log/squid
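Because `storeurl_rewrite_program` only exists in the Squid 2.7 branch, it is worth confirming what the freshly installed binary reports before pasting the configuration. A minimal bash sketch, assuming the first line of `squid -v` has the form `Squid Cache: Version 2.7.STABLE9`; the function `is_squid27` is hypothetical:

```bash
#!/usr/bin/env bash
# is_squid27 LINE: succeed (exit 0) if LINE, the first line of "squid -v",
# reports a 2.7 release. It only inspects text; it does not run squid.
is_squid27() {
    local first_line="$1"
    # Keep only what follows "Version ", e.g. "2.7.STABLE9"
    local version="${first_line##*Version }"
    [[ "$version" == 2.7.* ]]
}

# Usage on the Squid box (this build installs the binary in /usr/sbin):
#   is_squid27 "$(/usr/sbin/squid -v | head -n 1)" && echo "storeurl supported"
```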

Now it's time to edit the Squid configuration file. Open it with:

nano /etc/squid/squid.conf

Remove all existing lines (i.e., empty the file) and paste in all of the following lines . . .

# Last Updated : 09th FEBRUARY, 2014 / Syed Jahanzaib

# SQUID 2.7 Stable9 Configuration FILE with updated STOREURL.PL [jz]

# Tested with Ubuntu 10.04 & 12.04 with a compiled version of Squid 2.7 STABLE9 [jz]

# Various contents copied from multiple public shared sources, personal configs, hit and trial, VC etc

# It does have a lot of junk / unnecessary entries, so remove them if not required.

# Syed Jahanzaib / https://aacable.wordpress.com

# Email: aacable@hotamil.com

# PORT and Transparent Option [jz]

http_port 8080 transparent

server_http11 on

# PID File location, we can use it for various functions later, like for squid status (JZ)

pid_filename /var/run/squid.pid

# Cache directory; modify it according to your system. [jz]

# But first create the directory in root with: mkdir /cache-1

# and then issue this command: chown proxy:proxy /cache-1

# [on Ubuntu the user is proxy, on Fedora it is squid]

# I have set aside 200 GB just for caching;

# adjust it according to your needs.

# My recommendation is to have one cache_dir per drive. /zaib

# This example uses 10 GB (10240 MB) per drive.

store_dir_select_algorithm round-robin

cache_dir aufs /cache-1 10240 16 256

# Cache Replacement Policies [jz]

cache_replacement_policy heap GDSF

memory_replacement_policy heap GDSF

# If you want to enable date/time in Squid logs, use the following [jz]

emulate_httpd_log on

logformat squid %tl %6tr %>a %Ss/%03Hs %<st %rm %ru %un %Sh/%<A %mt

log_fqdn off

# How many days of users' access web logs to keep [jz]

# You need to rotate your log files with a cron job. For example
# (with the configure options above, the squid binary is /usr/sbin/squid):

# 0 0 * * * /usr/sbin/squid -k rotate

logfile_rotate 14

debug_options ALL,1

# Squid Logs Section

# access_log none # To disable Squid access log, enable this option

cache_access_log /var/log/squid/access.log

cache_log /var/log/squid/cache.log

#referer_log /var/log/squid/referer.log

cache_store_log /var/log/squid/store.log

#mime_table /etc/squid/mime.conf

log_mime_hdrs off

# I used the DNSMASQ service for fast DNS resolving,

# so install it first using "apt-get install dnsmasq" / zaib

dns_nameservers 8.8.8.8

ftp_user anonymous@

ftp_list_width 32

ftp_passive on

ftp_sanitycheck on

#ACL Section

acl all src 0.0.0.0/0.0.0.0 # Allow All, you may want to change this to allow your ip series only

acl localhost src 127.0.0.1/255.255.255.255

acl to_localhost dst 127.0.0.0/8

###### Cache manager section start; you can remove it if not required ####

# install the following

# apt-get install squid-cgi

# add the following entry in /etc/squid/cachemgr.conf

# localhost:8080

# then you can access it via http://squid_ip/cgi-bin/cachemgr.cgi

acl manager url_regex -i ^cache_object:// /squid-internal-mgr/

acl managerAdmin src 10.0.0.1 # Change it to your management pc ip

cache_mgr zaib@zaib.com

cachemgr_passwd zaib all

http_access allow manager localhost

http_access allow manager managerAdmin

http_access deny manager

#http_access allow localhost

####### CACHEMGR END #########

acl SSL_ports port 443 563 # https, snews

acl SSL_ports port 873 # rsync

acl Safe_ports port 80 # http

acl Safe_ports port 21 # ftp

acl Safe_ports port 53 # dns

acl Safe_ports port 443 563 # https, snews

acl Safe_ports port 70 # gopher

acl Safe_ports port 210 # wais

acl Safe_ports port 1025-65535 # unregistered ports

acl Safe_ports port 280 # http-mgmt

acl Safe_ports port 488 # gss-http

acl Safe_ports port 591 # filemaker

acl Safe_ports port 777 # multiling http

acl Safe_ports port 631 # cups

acl Safe_ports port 873 # rsync

acl Safe_ports port 901 # SWAT

acl purge method PURGE

acl CONNECT method CONNECT

http_access allow purge localhost

http_access deny purge

http_access deny !Safe_ports

http_access deny CONNECT !SSL_ports

http_access allow localhost

http_access allow all

http_reply_access allow all

icp_access allow all

#===============================

# Administrative Parameters [jz]

#===============================

# I used UBUNTU, so the user is proxy; in FEDORA you may use squid [jz]

cache_effective_user proxy

cache_effective_group proxy

cache_mgr SYED_JAHANZAIB

visible_hostname aacable@hotmai.com

unique_hostname aacable@hotmai.com

#=================

# ACCELERATOR [jz]

#=================

half_closed_clients off

quick_abort_min 0 KB

quick_abort_max 0 KB

vary_ignore_expire on

reload_into_ims on

memory_pools off

cache_swap_low 90

cache_swap_high 95

max_filedescriptors 65536

fqdncache_size 16384

retry_on_error on

offline_mode off

pipeline_prefetch on

check_hostnames off

client_db on

#range_offset_limit 128 KB

max_stale 1 week

read_ahead_gap 1 KB

forwarded_for off

minimum_expiry_time 1960 seconds

collapsed_forwarding on

cache_vary on

update_headers off

incoming_rate 9

ignore_ims_on_miss off

# If you want to hide your proxy machine from being detected by various sites, use the following [jz]

via off

#==========================

# Squid Memory Tuning [jz]

#==========================

# If you have 4 GB of memory in the Squid box, use a formula of 1/3 of RAM.

# You can adjust it according to your needs. If Squid is taking too much RAM,

# then decrease it to 512 MB or even less.

cache_mem 1024 MB

minimum_object_size 0 bytes

maximum_object_size 1 GB

# Lower it if Squid is taking too much memory, e.g.: 512 KB or even less

maximum_object_size_in_memory 512 KB

#============================================================

# SNMP , if you want to generate graphs for SQUID via MRTG [jz]

#============================================================

#acl snmppublic snmp_community gl

#snmp_port 3401

#snmp_access allow snmppublic all

#snmp_access allow all

#===========================================================================

# ZPH (for 2.7): to deliver cached content at full LAN speed,

# i.e. to let cached content bypass the queue on the MikroTik (MT) / zaib

#===========================================================================

tcp_outgoing_tos 0x30 all

zph_mode tos

zph_local 0x30

zph_parent 0

zph_option 136

# ++++++++++++++++++++++++++++++++++++++++++++++++

# +++++++++++ REFRESH PATTERNS SECTION +++++++++++

# ++++++++++++++++++++++++++++++++++++++++++++++++

#===================================

# youtube Caching Configuration

#===================================

strip_query_terms off

acl yutub url_regex -i .*youtube\.com\/.*$

acl yutub url_regex -i .*youtu\.be\/.*$

logformat squid1 %{Referer}>h %ru

access_log /var/log/squid/yt.log squid1 yutub

acl redirec urlpath_regex -i .*&redirect_counter=1&cms_redirect=yes

acl redirec urlpath_regex -i .*&ir=1&rr=12

acl reddeny url_regex -i c\.youtube\.com\/videoplayback.*redirect_counter=1.*$

acl reddeny url_regex -i c\.youtube\.com\/videoplayback.*cms_redirect=yes.*$

acl reddeny url_regex -i c\.youtube\.com\/videoplayback.*\&ir=1.*$

acl reddeny url_regex -i c\.youtube\.com\/videoplayback.*\&rr=12.*$

storeurl_access deny reddeny

#--------------------------------------------------------#

# REFRESH PATTERN UPDATED: 27th September, 2013

#--------------------------------------------------------#

refresh_pattern ^http\:\/\/*\.facebook\.com\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http\:\/\/*\.kaskus\.us\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http\:\/\/*\.google\.co\*.*/ 10080 90% 43200 reload-into-ims

refresh_pattern ^http\:\/\/*\.yahoo\.co*\.*/ 10080 90% 43200 reload-into-ims

refresh_pattern ^http\:\/\/.*\.windowsupdate\.microsoft\.com\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http\:\/\/office\.microsoft\.com\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http\:\/\/windowsupdate\.microsoft\.com\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http\:\/\/w?xpsp[0-9]\.microsoft\.com\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http\:\/\/w2ksp[0-9]\.microsoft\.com\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http\:\/\/download\.microsoft\.com\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http\:\/\/download\.macromedia\.com\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^ftp\:\/\/ftp\.nai\.com/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http\:\/\/ftp\.software\.ibm\.com\/ 10080 80% 43200 reload-into-ims

refresh_pattern ^http://*.apps.facebook.*/.* 720 80% 4320

refresh_pattern ^http://*.profile.ak.fbcdn.net/.* 720 80% 4320

refresh_pattern ^http://*.creative.ak.fbcdn.net/.* 720 80% 4320

refresh_pattern ^http://*.static.ak.fbcdn.net/.* 720 80% 4320

refresh_pattern ^http://*.facebook.poker.zynga.com/.* 720 80% 4320

refresh_pattern ^http://*.statics.poker.static.zynga.com/.* 720 80% 4320

refresh_pattern ^http://*.zynga.*/.* 720 80% 4320

refresh_pattern ^http://*.texas_holdem.*/.* 720 80% 4320

refresh_pattern ^http://*.google.*/.* 720 80% 4320

refresh_pattern ^http://*.indowebster.*/.* 720 80% 4320

refresh_pattern ^http://*.4shared.*/.* 720 80% 4320

refresh_pattern ^http://*.yahoo.com/.* 720 80% 4320

refresh_pattern ^http://*.yimg.*/.* 720 80% 4320

refresh_pattern ^http://*.boleh.*/.* 720 80% 4320

refresh_pattern ^http://*.kompas.*/.* 180 80% 4320

refresh_pattern ^http://*.google-analytics.*/.* 720 80% 4320

refresh_pattern ^http://(.*?)/get_video\? 10080 90% 999999 override-expire ignore-no-cache ignore-private

refresh_pattern ^http://(.*?)/videoplayback\? 10080 90% 999999 override-expire ignore-no-cache ignore-private

refresh_pattern -i (get_video\?|videoplayback\?id|videoplayback.*id) 161280 50000% 525948 override-expire ignore-reload

# compressed

refresh_pattern -i \.gz$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.cab$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.bzip2$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.bz2$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.gz2$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.tgz$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.tar.gz$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.zip$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.rar$ 1008000 90% 99999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.tar$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.ace$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.7z$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

# documents

refresh_pattern -i \.xls$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.doc$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.xlsx$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.docx$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.pdf$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.ppt$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.pptx$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.rtf\?$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

# multimedia

refresh_pattern -i \.mid$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.wav$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.viv$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.mpg$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.mov$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.avi$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.asf$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.qt$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.rm$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.rmvb$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.mpeg$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.wmp$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.3gp$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.mp3$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.mp4$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

# web content

refresh_pattern -i \.js$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.psf$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.html$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.htm$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.css$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.swf$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.js\?$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.css\?$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.xml$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

# images

refresh_pattern -i \.gif$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.jpg$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.png$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.jpeg$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.bmp$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.psd$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.ad$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.gif\?$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.jpg\?$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.png\?$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.jpeg\?$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.psd\?$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

# application

refresh_pattern -i \.deb$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.rpm$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.msi$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.exe$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.dmg$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

# misc

refresh_pattern -i \.dat$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.qtm$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

# itunes

refresh_pattern -i \.m4p$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

refresh_pattern -i \.mpa$ 10080 90% 999999 override-expire override-lastmod reload-into-ims ignore-reload

# JUNK : O ~

refresh_pattern ^ftp: 1440 20% 10080

refresh_pattern -i \.(avi|wav|mid|mp?|mpeg|mov|3gp|wm?|swf|flv|x-flv|css|js|axd)$ 10080 95% 302400 override-expire override-lastmod reload-into-ims ignore-reload ignore-no-cache ignore-private ignore-auth

refresh_pattern -i \.(gif|png|jp?g|ico|bmp)$ 4320 95% 10080 override-expire override-lastmod reload-into-ims ignore-reload ignore-no-cache ignore-private ignore-auth

refresh_pattern -i \.(rpm|cab|exe|msi|msu|zip|tar|gz|tgz|rar|bin|7z|doc|xls|ppt|pdf)$ 4320 90% 10080 override-expire override-lastmod reload-into-ims

refresh_pattern -i (/cgi-bin/|\?) 0 0% 0

refresh_pattern . 360 90% 302400 override-lastmod reload-into-ims

########################################################################

## MORE REFRESH PATTERN SETTINGS (including video cache config too)

########################################################################

acl dontrewrite url_regex (get_video|video\?v=|videoplayback\?id|videoplayback.*id).*begin\=[1-9][0-9]* \.php\? \.asp\? \.aspx\? threadless.*\.jpg\?r=

acl store_rewrite_list urlpath_regex \/(get_video|videoplayback\?id|videoplayback.*id) \.(jp(e?g|e|2)|gif|png|tiff?|bmp|ico|flv|wmv|3gp|mp(4|3)|exe|msi|zip|on2|mar|swf)\?

acl store_rewrite_list urlpath_regex \/(get_video\?|videodownload\?|videoplayback.*id|watch\?)

acl store_rewrite_list urlpath_regex \.(3gp|mp(3|4)|flv|(m|f)4v|on2|fid|avi|mov|wm(a|v)|(mp(e?g|a|e|1|2))|mk(a|v)|jp(e?g|e|2)|gif|png|tiff?|bmp|tga|svg|ico|swf|exe|ms(i|u|p)|cab|psf|mar|bin|z(ip|[0-9]{2})|r(ar|[0-9]{2})|7z)\?

acl store_rewrite_list_domain url_regex ^http:\/\/([a-zA-Z-]+[0-9-]+)\.[A-Za-z]*\.[A-Za-z]*

acl store_rewrite_list_domain url_regex (([a-z]{1,2}[0-9]{1,3})|([0-9]{1,3}[a-z]{1,2}))\.[a-z]*[0-9]?\.[a-z]{3}

acl store_rewrite_list_path urlpath_regex \.(jp(e?g|e|2)|gif|png|tiff?|bmp|ico|flv|avc|zip|mp3|3gp|rar|on2|mar|exe)$

acl store_rewrite_list_domain_CDN url_regex streamate.doublepimp.com.*\.js\? .fbcdn.net \.rapidshare\.com.*\/[0-9]*\/.*\/[^\/]* ^http:\/\/(www\.ziddu\.com.*\.[^\/]{3,4})\/(.*) \.doubleclick\.net.* yield$

acl store_rewrite_list_domain_CDN url_regex (cbk|mt|khm|mlt|tbn)[0-9]?.google\.co(m|\.uk|\.id)

acl store_rewrite_list_domain_CDN url_regex ^http://(.*?)/windowsupdate\?

acl store_rewrite_list_domain_CDN url_regex photos-[a-z].ak.fbcdn.net

acl store_rewrite_list_domain_CDN url_regex ^http:\/\/([a-z])[0-9]?(\.gstatic\.com|\.wikimapia\.org)

acl store_rewrite_list_domain_CDN url_regex ^http:\/\/download[0-9]{3}.avast.com/iavs5x/

acl store_rewrite_list_domain_CDN url_regex ^http:\/\/dnl-[0-9]{2}.geo.kaspersky.com

acl store_rewrite_list_domain_CDN url_regex ^http:\/\/[1-4].bp.blogspot.com

acl store_rewrite_list_path urlpath_regex \.fid\?.*\&start= \.(jp(e?g|e|2)|gif|png|tiff?|bmp|ico|psf|flv|avc|zip|mp3|3gp|rar|on2|mar|exe)$

acl store_rewrite_list_domain_CDN url_regex \.rapidshare\.com.*\/[0-9]*\/.*\/[^\/]* ^http:\/\/(www\.ziddu\.com.*\.[^\/]{3,4})\/(.*) \.doubleclick\.net.*

acl store_rewrite_list_domain_CDN url_regex ^http:\/\/[.a-z0-9]*\.photobucket\.com.*\.[a-z]{3}$ quantserve\.com

acl store_rewrite_list_domain_CDN url_regex ^http:\/\/[a-z]+[0-9]\.google\.co(m|\.id)

acl store_rewrite_list_domain_CDN url_regex ^http:\/\/\.www[0-9][0-9]\.indowebster\.com\/(.*)(rar|zip|flv|wm(a|v)|3gp|psf|mp(4|3)|exe|msi|avi|(mp(e?g|a|e|1|2|3|4))|cab|exe)

# Videos Config / jz

acl videocache_allow_url url_regex -i \.googlevideo\.com\/videoplayback \.googlevideo\.com\/videoplay \.googlevideo\.com\/get_video\?

acl videocache_allow_url url_regex -i \.google\.com\/videoplayback \.google\.com\/videoplay \.google\.com\/get_video\?

acl videocache_allow_url url_regex -i \.google\.[a-z][a-z]\/videoplayback \.google\.[a-z][a-z]\/videoplay \.google\.[a-z][a-z]\/get_video\?

acl videocache_allow_url url_regex -i proxy[a-z0-9\-][a-z0-9][a-z0-9][a-z0-9]?\.dailymotion\.com\/

acl videocache_allow_url url_regex -i \.vimeo\.com\/(.*)\.(flv|mp4)

acl videocache_allow_url url_regex -i va\.wrzuta\.pl\/wa[0-9][0-9][0-9][0-9]?

acl videocache_allow_url url_regex -i \.youporn\.com\/(.*)\.flv

acl videocache_allow_url url_regex -i \.msn\.com\.edgesuite\.net\/(.*)\.flv

acl videocache_allow_url url_regex -i \.tube8\.com\/(.*)\.(flv|3gp)

acl videocache_allow_url url_regex -i \.mais\.uol\.com\.br\/(.*)\.flv

acl videocache_allow_url url_regex -i \.blip\.tv\/(.*)\.(flv|avi|mov|mp3|m4v|mp4|wmv|rm|ram|m4v)

acl videocache_allow_url url_regex -i \.apniisp\.com\/(.*)\.(flv|avi|mov|mp3|m4v|mp4|wmv|rm|ram|m4v)

acl videocache_allow_url url_regex -i \.break\.com\/(.*)\.(flv|mp4)

acl videocache_allow_url url_regex -i redtube\.com\/(.*)\.flv

acl videocache_allow_url url_regex -i vid\.akm\.dailymotion\.com\/

acl videocache_allow_url url_regex -i [a-z0-9][0-9a-z][0-9a-z]?[0-9a-z]?[0-9a-z]?\.xtube\.com\/(.*)flv

acl videocache_allow_url url_regex -i bitcast\.vimeo\.com\/vimeo\/videos\/

acl videocache_allow_url url_regex -i \.files\.youporn\.com\/(.*)\/flv\/

acl videocache_allow_url url_regex -i media[a-z0-9]?[a-z0-9]?[a-z0-9]?\.tube8\.com\/ mobile[a-z0-9]?[a-z0-9]?[a-z0-9]?\.tube8\.com\/ www\.tube8\.com\/(.*)\/

acl videocache_allow_url url_regex -i \.video[a-z0-9]?[a-z0-9]?\.blip\.tv\/(.*)\.(flv|avi|mov|mp3|m4v|mp4|wmv|rm|ram)

acl videocache_allow_url url_regex -i video\.break\.com\/(.*)\.(flv|mp4)

acl videocache_allow_url url_regex -i \.xvideos\.com\/videos\/flv\/(.*)\/(.*)\.(flv|mp4)

acl videocache_allow_url url_regex -i stream\.aol\.com\/(.*)/[a-zA-Z0-9]+\/(.*)\.(flv|mp4)

acl videocache_allow_url url_regex -i videos\.5min\.com\/(.*)/[0-9_]+\.(mp4|flv)

acl videocache_allow_url url_regex -i msn\.com\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i msn\.(.*)\.(com|net)\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i msnbc\.(.*)\.(com|net)\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i \.blip\.tv\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_deny_url url_regex -i \.blip\.tv\/(.*)filename

acl videocache_allow_url url_regex -i \.break\.com\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i cdn\.turner\.com\/(.*)/(.*)\.(flv)

acl videocache_allow_url url_regex -i \.dailymotion\.com\/video\/[a-z0-9]{5,9}_?(.*)

acl videocache_allow_url url_regex -i proxy[a-z0-9\-]?[a-z0-9]?[a-z0-9]?[a-z0-9]?\.dailymotion\.com\/(.*)\.(flv|on2|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i vid\.akm\.dailymotion\.com\/(.*)\.(flv|on2|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i vid\.ec\.dmcdn\.net\/(.*)\.(flv|on2|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i video\.(.*)\.fbcdn\.net\/(.*)/[0-9_]+\.(mp4|flv|avi|mkv|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i \.mccont\.com\/ItemFiles\/(.*)?\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i (.*)\.myspacecdn\.com\/(.*)\/[a-zA-Z0-9]+\/vid\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i (.*)\.myspacecdn\.(.*)\.footprint\.net\/(.*)\/[a-zA-Z0-9]+\/vid\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i \.vimeo\.com\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i \.amazonaws\.com\/(.*)\.vimeo\.com(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i v\.imwx\.com\/v\/wxcom\/[a-zA-Z0-9]+\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)\?(.*)videoId=[0-9]+&

acl videocache_allow_url url_regex -i c\.wrzuta\.pl\/wv[0-9]+\/[a-z0-9]+/[0-9]+/

acl videocache_allow_url url_regex -i c\.wrzuta\.pl\/wa[0-9]+\/[a-z0-9]+

acl videocache_allow_url url_regex -i cdn[a-z0-9]?[a-z0-9]?[a-z0-9]?\.public\.extremetube\.phncdn\.com\/(.*)\/[a-zA-Z0-9_-]+\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i vs[a-z0-9]?[a-z0-9]?[a-z0-9]?\.hardsextube\.com\/(.*)\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_deny_url url_regex -i \.hardsextube\.com\/videothumbs

acl videocache_allow_url url_regex -i cdn[a-z0-9]?[a-z0-9]?[a-z0-9]?\.public\.keezmovies\.phncdn\.com\/(.*)\/[0-9a-zA-Z_\-]+\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i cdn[a-z0-9]?[a-z0-9]?[a-z0-9]?\.public\.keezmovies\.com\/(.*)\/[0-9a-zA-Z_\-]+\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i nyc-v[a-z0-9]?[a-z0-9]?[a-z0-9]?\.pornhub\.com\/(.*)/videos/[0-9]{3}/[0-9]{3}/[0-9]{3}/[0-9]+\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i \.video\.pornhub\.phncdn\.com\/videos/(.*)/[0-9]+\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i video(.*)\.redtubefiles\.com\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i \.slutload-media\.com\/(.*)\/[a-zA-Z0-9_.-]+\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i cdn[a-z0-9]?[a-z0-9]?[a-z0-9]?\.public\.spankwire\.com\/(.*)\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i cdn[a-z0-9]?[a-z0-9]?[a-z0-9]?\.public\.spankwire\.phncdn\.com\/(.*)\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i \.tube8\.com\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_url url_regex -i \.xtube\.com\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_deny_url url_regex -i \.xtube\.com\/(.*)(Thumb|videowall)

acl videocache_allow_url url_regex -i \.xvideos\.com\/videos\/flv\/(.*)\/(.*)\.(flv|mp4)

acl videocache_allow_url url_regex -i \.public\.youporn\.phncdn\.com\/(.*)\/[a-zA-Z0-9_-]+\/(.*)\.(flv|mp4|avi|mkv|mp3|rm|rmvb|m4v|mov|wmv|3gp|mpg|mpeg)

acl videocache_allow_dom dstdomain .mccont.com .metacafe.com .redtube.com .dailymotion.com .fbcdn.net

acl videocache_deny_dom dstdomain .download.youporn.com .static.blip.tv

acl dontrewrite url_regex redbot\.org (get_video|videoplayback\?id|videoplayback.*id).*begin\=[1-9][0-9]*

acl getmethod method GET

storeurl_access deny !getmethod

storeurl_access deny dontrewrite

storeurl_access allow videocache_allow_url

storeurl_access allow videocache_allow_dom

storeurl_access allow store_rewrite_list_domain_CDN

storeurl_access allow store_rewrite_list

storeurl_access allow store_rewrite_list_domain store_rewrite_list_path

storeurl_access deny all

# Load STOREURL.PL REWRITE PROGRAM

storeurl_rewrite_program /etc/squid/storeurl.pl

storeurl_rewrite_children 15

storeurl_rewrite_concurrency 999

acl store_rewrite_list urlpath_regex -i \/(get_video\?|videodownload\?|videoplayback.*id)

acl store_rewrite_list urlpath_regex -i \.flv$ \.mp3$ \.mov$ \.mp4$ \.swf$

storeurl_access allow store_rewrite_list

storeurl_access deny all

Now save squid.conf & Exit.
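Squid evaluates the `storeurl_access` lines from top to bottom and acts on the first line whose ACLs all match. This means any rule placed after `storeurl_access deny all` is never consulted, for example the `storeurl_access allow store_rewrite_list` line that follows the first `deny all` above. The toy model below illustrates that first-match behaviour, with one regular expression standing in for each ACL. It is a hypothetical sketch for understanding the ordering, not Squid's own parser. The `first_match` function and its rule format are invented for illustration.

```sh
#!/bin/sh
# Toy model of first-match access-list evaluation (hypothetical helper,
# not Squid code). Each rule line is "allow REGEX", "deny REGEX" or
# "deny all"; the first rule whose regex matches the URL decides.
first_match() {
  url=$1
  while read -r action pattern; do
    [ -z "$action" ] && continue            # skip blank lines
    if [ "$pattern" = "all" ] || printf '%s\n' "$url" | grep -Eiq -- "$pattern"; then
      echo "$action"                        # first matching rule wins
      return 0
    fi
  done
  echo "no-match"                           # real Squid applies its own default here
}

rules='deny redbot\.org
allow videoplayback.*id
deny all
allow \.flv$'

printf '%s\n' "$rules" | first_match "http://r5.c.youtube.com/videoplayback?id=abc&itag=140"
# prints: allow
printf '%s\n' "$rules" | first_match "http://example.com/clip.flv"
# prints: deny   (the later "allow \.flv$" rule is never reached)
```

The same reasoning applies to the real config: to make a rule effective, move it above the `deny all` line.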


STOREURL.PL

Now create storeurl.pl, which will be used to pull YouTube videos from the cache.

touch /etc/squid/storeurl.pl

chmod +x /etc/squid/storeurl.pl

Now edit this file

nano /etc/squid/storeurl.pl

and paste the following contents.

#!/usr/bin/perl

# This script was NOT written or modified by me; I only copy-pasted it from the internet.

# It was originally written by chudy_fernandez@yahoo.com

# and has since been modified by various people over the net to fix or add various functions.

# For example, it was modified by members of comstuff.net to handle common and dynamic content.

# th30nly @comstuff.net a.k.a invisible_theater , Syaifudin JW , Ucok Karnadi and possibly other people too.

# For more info, http://wiki.squid-cache.org/ConfigExamples/DynamicContent/YouTube

# Syed Jahanzaib / aacable@hotmail.com

# https://aacable.wordpress.com/2012/01/19/youtube-caching-with-squid-2-7-using-storeurl-pl/

#######################

# Special thanks to some Indonesian friends who provided some updates.

## UPDATED on 20 January, 2014 / Syed Jahanzaib

#####################

#### REFERENCES ##### http://www2.fh-lausitz.de/launic/comp/misc/squid/projekt_youtube/

#####################

#####################

use IO::File;

$|=1;

STDOUT->autoflush(1);

$debug=1; ## recommended:0

$bypassallrules=0; ## recommended:0

$sucks=""; ## unused

$sucks="sucks" if ($debug>=1);

$timenow="";

$printtimenow=1; ## print timenow: 0|1

my $logfile = '/tmp/storeurl.log';

open my $logfh, '>>', $logfile

or die "Couldn't open $logfile for appending: $!\n" if $debug;

$logfh->autoflush(1) if $debug;

#### main

while (<>) {

$timenow=time()." " if ($printtimenow);

print $logfh "$timenow"."in : $_" if ($debug>=1);

chop; ## strip eol

@X = split;

$x = $X[0]; ## 0

$u = $X[1]; ## url

$_ = $u; ## url

if ($bypassallrules){

$out="$u"; ## map 1:1

#youtube with range (YOUTUBE has split its videos into segments)

}elsif (m/(youtube|google).*videoplayback\?.*range/ ){

@itag = m/[&?](itag=[0-9]*)/;

@id = m/[&?](id=[^\&]*)/;

@range = m/[&?](range=[^\&\s]*)/;

@begin = m/[&?](begin=[^\&\s]*)/;

@redirect = m/[&?](redirect_counter=[^\&]*)/;

$out="http://video-srv.youtube.com.SQUIDINTERNAL/@itag&@id&@range&@redirect";

#sleep(1); ## delay loop

#youtube without range

}elsif (m/(youtube|google).*videoplayback\?/ ){

@itag = m/[&?](itag=[0-9]*)/;

@id = m/[&?](id=[^\&]*)/;

@redirect = m/[&?](redirect_counter=[^\&]*)/;

$out="http://video-srv.youtube.com.SQUIDINTERNAL/@itag&@id&@redirect";

#sleep(1); ## delay loop

#speedtest

}elsif (m/^http:\/\/(.*)\/speedtest\/(.*\.(jpg|txt))\?(.*)/) {

$out="http://www.speedtest.net.SQUIDINTERNAL/speedtest/" . $2 . "";

#mediafire

}elsif (m/^http:\/\/199\.91\.15\d\.\d*\/\w{12}\/(\w*)\/(.*)/) {

$out="http://www.mediafire.com.SQUIDINTERNAL/" . $1 ."/" . $2 . "";

#fileserve

}elsif (m/^http:\/\/fs\w*\.fileserve\.com\/file\/(\w*)\/[\w-]*\.\/(.*)/) {

$out="http://www.fileserve.com.SQUIDINTERNAL/" . $1 . "./" . $2 . "";

#filesonic

}elsif (m/^http:\/\/s[0-9]*\.filesonic\.com\/download\/([0-9]*)\/(.*)/) {

$out="http://www.filesonic.com.SQUIDINTERNAL/" . $1 . "";

#4shared

}elsif (m/^http:\/\/[a-zA-Z]{2}\d*\.4shared\.com(:8080|)\/download\/(.*)\/(.*\..*)\?.*/) {

$out="http://www.4shared.com.SQUIDINTERNAL/download/$2\/$3";

#4shared preview

}elsif (m/^http:\/\/[a-zA-Z]{2}\d*\.4shared\.com(:8080|)\/img\/(\d*)\/\w*\/dlink__2Fdownload_2F(\w*)_3Ftsid_3D[\w-]*\/preview\.mp3\?sId=\w*/) {

$out="http://www.4shared.com.SQUIDINTERNAL/$2";

#photos-X.ak.fbcdn.net where X a-z

}elsif (m/^http:\/\/photos-[a-z](\.ak\.fbcdn\.net)(\/.*\/)(.*\.jpg)/) {

$out="http://photos" . $1 . "/" . $2 . $3 . "";

#YX.sphotos.ak.fbcdn.net where X 1-9, Y a-z

} elsif (m/^http:\/\/[a-z][0-9]\.sphotos\.ak\.fbcdn\.net\/(.*)\/(.*)/) {

$out="http://photos.ak.fbcdn.net/" . $1 ."/". $2 . "";

#maps.google.com

} elsif (m/^http:\/\/(cbk|mt|khm|mlt|tbn)[0-9]?(.google\.co(m|\.uk|\.id).*)/) {

$out="http://" . $1 . $2 . "";

# compatibility for old cached get_video?video_id

} elsif (m/^http:\/\/([0-9.]{4}|.*\.youtube\.com|.*\.googlevideo\.com|.*\.video\.google\.com).*?(videoplayback\?id=.*?|video_id=.*?)\&(.*?)/) {

$z = $2; $z =~ s/video_id=/get_video?video_id=/;

$out="http://video-srv.youtube.com.SQUIDINTERNAL/" . $z . "";

#sleep(1); ## delay loop

} elsif (m/^http:\/\/www\.google-analytics\.com\/__utm\.gif\?.*/) {

$out="http://www.google-analytics.com/__utm.gif";

#Cache High Latency Ads

} elsif (m/^http:\/\/([a-z0-9.]*)(\.doubleclick\.net|\.quantserve\.com|\.googlesyndication\.com|yieldmanager|cpxinteractive)(.*)/) {

$y = $3;$z = $2;

for ($y) {

s/pixel;.*/pixel/;

s/activity;.*/activity/;

s/(imgad[^&]*).*/\1/;

s/;ord=[?0-9]*//;

s/;&timestamp=[0-9]*//;

s/[&?]correlator=[0-9]*//;

s/&cookie=[^&]*//;

s/&ga_hid=[^&]*//;

s/&ga_vid=[^&]*//;

s/&ga_sid=[^&]*//;

# s/&prev_slotnames=[^&]*//

# s/&u_his=[^&]*//;

s/&dt=[^&]*//;

s/&dtd=[^&]*//;

s/&lmt=[^&]*//;

s/(&alternate_ad_url=http%3A%2F%2F[^(%2F)]*)[^&]*/\1/;

s/(&url=http%3A%2F%2F[^(%2F)]*)[^&]*/\1/;

s/(&ref=http%3A%2F%2F[^(%2F)]*)[^&]*/\1/;

s/(&cookie=http%3A%2F%2F[^(%2F)]*)[^&]*/\1/;

s/[;&?]ord=[?0-9]*//;

s/[;&]mpvid=[^&;]*//;

s/&xpc=[^&]*//;

# yieldmanager

s/\?clickTag=[^&]*//;

s/&u=[^&]*//;

s/&slotname=[^&]*//;

s/&page_slots=[^&]*//;

}

$out="http://" . $1 . $2 . $y . "";

#cache high latency ads

} elsif (m/^http:\/\/(.*?)\/(ads)\?(.*?)/) {

$out="http://" . $1 . "/" . $2 . "";

# specific servers start here....

} elsif (m/^http:\/\/(www\.ziddu\.com.*\.[^\/]{3,4})\/(.*?)/) {

$out="http://" . $1 . "";

#cdn, variable 1st path

} elsif (($u =~ /filehippo/) && (m/^http:\/\/(.*?)\.(.*?)\/(.*?)\/(.*)\.([a-z0-9]{3,4})(\?.*)?/)) {

@y = ($1,$2,$4,$5);

$y[0] =~ s/[a-z0-9]{2,5}/cdn./;

$out="http://" . $y[0] . $y[1] . "/" . $y[2] . "." . $y[3] . "";

#rapidshare

} elsif (($u =~ /rapidshare/) && (m/^http:\/\/(([A-Za-z]+[0-9-.]+)*?)([a-z]*\.[^\/]{3}\/[a-z]*\/[0-9]*)\/(.*?)\/([^\/\?\&]{4,})$/)) {

$out="http://cdn." . $3 . "/SQUIDINTERNAL/" . $5 . "";

} elsif (($u =~ /maxporn/) && (m/^http:\/\/([^\/]*?)\/(.*?)\/([^\/]*?)(\?.*)?$/)) {

$out="http://" . $1 . "/SQUIDINTERNAL/" . $3 . "";

#like porn hub: variable url and center part of the path, filename extension 3 or 4 chars, with or without ? at the end

} elsif (($u =~ /tube8|pornhub|xvideos/) && (m/^http:\/\/(([A-Za-z]+[0-9-.]+)*?(\.[a-z]*)?)\.([a-z]*[0-9]?\.[^\/]{3}\/[a-z]*)(.*?)((\/[a-z]*)?(\/[^\/]*){4}\.[^\/\?]{3,4})(\?.*)?$/)) {

$out="http://cdn." . $4 . $6 . "";

#...specific servers end here.

#photos-X.ak.fbcdn.net where X a-z

} elsif (m/^http:\/\/photos-[a-z].ak.fbcdn.net\/(.*)/) {

$out="http://photos.ak.fbcdn.net/" . $1 . "";

#for yimg.com video

} elsif (m/^http:\/\/(.*yimg.com)\/\/(.*)\/([^\/\?\&]*\/[^\/\?\&]*\.[^\/\?\&]{3,4})(\?.*)?$/) {

$out="http://cdn.yimg.com//" . $3 . "";

#for yimg.com doubled

} elsif (m/^http:\/\/(.*?)\.yimg\.com\/(.*?)\.yimg\.com\/(.*?)\?(.*)/) {

$out="http://cdn.yimg.com/" . $3 . "";

#for yimg.com with &sig=

} elsif (m/^http:\/\/(.*?)\.yimg\.com\/(.*)/) {

@y = ($1,$2);

$y[0] =~ s/[a-z]+[0-9]+/cdn/;

$y[1] =~ s/&sig=.*//;

$out="http://" . $y[0] . ".yimg.com/" . $y[1] . "";

#youjizz. We use only domain and filename

} elsif (($u =~ /media[0-9]{2,5}\.youjizz/) && (m/^http:\/\/(.*)(\.[^\.\-]*?\..*?)\/(.*)\/([^\/\?\&]*)\.([^\/\?\&]{3,4})((\?|\%).*)?$/)) {

@y = ($1,$2,$4,$5);

$y[0] =~ s/(([a-zA-Z]+[0-9]+(-[a-zA-Z])?$)|(.*cdn.*)|(.*cache.*))/cdn/;

$out="http://" . $y[0] . $y[1] . "/" . $y[2] . "." . $y[3] . "";

#general purpose for cdn servers. add above your specific servers.

} elsif (m/^http:\/\/([0-9.]*?)\/\/(.*?)\.(.*)\?(.*?)/) {

$out="http://squid-cdn-url//" . $2 . "." . $3 . "";

#generic http://variable.domain.com/path/filename."ex" "ext" or "exte" with or without "? or %"

} elsif (m/^http:\/\/(.*)(\.[^\.\-]*?\..*?)\/(.*)\.([^\/\?\&]{2,4})((\?|\%).*)?$/) {

@y = ($1,$2,$3,$4);

$y[0] =~ s/(([a-zA-Z]+[0-9]+(-[a-zA-Z])?$)|(.*cdn.*)|(.*cache.*))/cdn/;

$out="http://" . $y[0] . $y[1] . "/" . $y[2] . "." . $y[3] . "";

} else {

$out="$u"; ##$X[2]="$sucks";

}

print $logfh "$timenow"."out: $x $out $X[2] $X[3] $X[4] $X[5] $X[6] $X[7]\n" if ($debug>=1);

print "$x $out $X[2] $X[3] $X[4] $X[5] $X[6] $X[7]\n";

}

close $logfh if ($debug);

Save & Exit.
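Before letting Squid start the helper, it can be checked and exercised by hand. The sketch below is a hypothetical pair of shell functions (`check_helper_script` and `probe_helper` are names made up for this example). The first looks for problems that commonly creep in when a script is copy-pasted from a web page (missing execute bit, DOS line endings, curly quotes or other non-ASCII characters) and runs `perl -c` if Perl is installed. The second sends one request line to the helper. The script above treats the first field as the channel ID and the second as the URL, and replies with the ID followed by the rewritten URL, so a single fake line is enough to see which store key a URL maps to.

```sh
#!/bin/sh
# Hypothetical pre-flight check for a storeurl helper script.
# Prints one line per problem found; returns non-zero if any were found.
check_helper_script() {
  f=$1
  status=0
  [ -f "$f" ] || { echo "missing: $f"; return 1; }
  [ -x "$f" ] || { echo "not executable: $f"; status=1; }
  # A carriage return means the file was saved with DOS line endings.
  if grep -q "$(printf '\r')" "$f"; then
    echo "DOS line endings (CR) found"; status=1
  fi
  # Bytes 0x80-0xFF: curly quotes and similar characters from copy/paste.
  if LC_ALL=C grep -q "$(printf '[\200-\377]')" "$f"; then
    echo "non-ASCII characters found"; status=1
  fi
  # Compile-only syntax check, skipped when perl is not installed.
  if command -v perl >/dev/null 2>&1 && ! perl -c "$f" >/dev/null 2>&1; then
    echo "perl -c reports an error"; status=1
  fi
  return "$status"
}

# Hypothetical probe: send one fake request line ("0" as the channel ID)
# to a helper and print the rewritten URL from the first reply line.
probe_helper() {
  printf '0 %s\n' "$2" | "$1" | awk 'NR == 1 { print $2 }'
}
```

For example, run `check_helper_script /etc/squid/storeurl.pl`, then `probe_helper /etc/squid/storeurl.pl 'http://r5---sn-gvnuxaxjvh-n8ve.c.youtube.com/videoplayback?id=98d455f40d4132a5&itag=140&range=0-1000'`. With the script above, the probe should print `http://video-srv.youtube.com.SQUIDINTERNAL/itag=140&id=98d455f40d4132a5&range=0-1000&` (the trailing `&` is the empty redirect_counter slot). Keep in mind that with `$debug=1` the script appends every request to /tmp/storeurl.log and dies at startup if it cannot open that file. This is one thing worth ruling out if Squid reports that the store_rewriter helpers are crashing.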

Now create the cache directory and give the proxy user proper permissions on it:


mkdir /cache-1

chown proxy:proxy /cache-1

chmod -R 777 /cache-1
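The three commands above can also be wrapped in a small function that stops at the first failure. `prepare_cache_dir` is a hypothetical helper written for this sketch. It defaults to the `proxy:proxy` owner and 777 mode used in this guide, and takes the mode as a parameter because, once the proxy user owns the directory, a tighter mode such as 750 already lets Squid write to it.

```sh
#!/bin/sh
# Hypothetical helper: create a cache directory, hand it to the Squid
# user and set its mode. Run as root for a real "proxy:proxy" owner.
# Usage: prepare_cache_dir DIR [OWNER[:GROUP]] [MODE]
prepare_cache_dir() {
  dir=$1
  owner=${2:-proxy:proxy}
  mode=${3:-777}
  mkdir -p "$dir" || return 1        # -p: no error if it already exists
  chown "$owner" "$dir" || return 1  # Squid's cache user must own it
  chmod "$mode" "$dir" || return 1
}

# Equivalent of the commands above:
# prepare_cache_dir /cache-1 proxy:proxy 777
```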


Now initialize the Squid cache directories with:

squid -z

chmod -R 777 /cache-1

You should see the following message:

Creating Swap Directories
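A successful `squid -z` should leave each `ufs`/`aufs` cache directory filled with first-level subdirectories named with two uppercase hexadecimal digits (00, 01, ...), one for each of the L1 directories set on your `cache_dir` line. The hypothetical `count_swap_dirs` function below (a name made up for this example) counts them so you can compare the result with that L1 value.

```sh
#!/bin/sh
# Hypothetical helper: count first-level swap directories (00, 01, ... 0F)
# inside a Squid cache directory. Prints 0 if none exist.
count_swap_dirs() {
  n=0
  for d in "$1"/[0-9A-F][0-9A-F]; do
    [ -d "$d" ] && n=$((n + 1))   # an unmatched glob stays literal and fails -d
  done
  echo "$n"
}

# Example: count_swap_dirs /cache-1
```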


After this, start SQUID with:

squid -d1N


Press Enter, and then issue this command to make sure Squid is running (the -N flag keeps Squid in the foreground, so you may need to run it from a second terminal):

ps aux |grep squid

You will see a few lines containing squid. If so, congrats, your Squid is up and running.
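Note that `ps aux | grep squid` usually also lists the grep command itself. A common trick is to wrap one character of the pattern in brackets: the regex `[s]quid` still matches "squid", but no longer matches grep's own command line, which contains the literal text `[s]quid`. The hypothetical `count_procs` function below applies that trick. Once Squid is up, `count_procs storeurl.pl` should report roughly as many helpers as `storeurl_rewrite_children` (15 in this config).

```sh
#!/bin/sh
# Hypothetical helper: count processes whose command line matches $1.
# "[s]quid" still matches "squid", but not grep's own argument "[s]quid".
count_procs() {
  first=$(printf '%s' "$1" | cut -c1)
  rest=$(printf '%s' "$1" | cut -c2-)
  ps -eo args= | grep -c -- "[$first]$rest" || true   # grep -c prints 0 on no match
}

# Examples: count_procs squid
#           count_procs storeurl.pl
```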

Also note that Squid will not start automatically when the system reboots, so you have to add an entry to

/etc/rc.local

Just add the following line (before the exit 0 line):

squid

From the client end, point your browser to use your Squid as its proxy server and test any video.


TESTING YOUTUBE CACHING 🙂


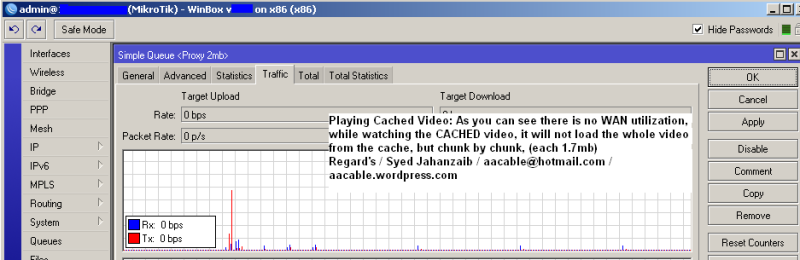

Now, from a test PC, open YouTube and play any video. After it has downloaded completely, delete the browser cache and play the same video again. This time it will be served from the cache. You can verify this by monitoring your WAN link utilization while playing the cached file.
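Besides watching the WAN graph, you can count cache hits in the access log. The hypothetical `hit_summary` function below (a name made up for this example) scans each field for a Squid result code. It recognizes both the form used in the log examples in this guide, such as `TCP_MISS:DIRECT`, and Squid's native form, such as `TCP_MISS/200`. It then reports how many of those codes contain HIT. As a simplification, it treats every *HIT code (TCP_HIT, TCP_MEM_HIT, TCP_IMS_HIT, TCP_NEGATIVE_HIT, ...) as a hit.

```sh
#!/bin/sh
# Hypothetical helper: summarize Squid result codes in access.log-style
# input (files as arguments, or stdin). Prints "hits=N total=M".
hit_summary() {
  awk '
    {
      for (i = 1; i <= NF; i++) {
        # result codes look like TCP_MEM_HIT:NONE or TCP_MISS/200
        if ($i ~ "^TCP_[A-Z_]+[/:]") {
          total++
          if ($i ~ /^TCP_[A-Z_]*HIT/) hits++
        }
      }
    }
    END { printf "hits=%d total=%d\n", hits, total }
  ' "$@"
}

# Example: hit_summary /var/log/squid/access.log
```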

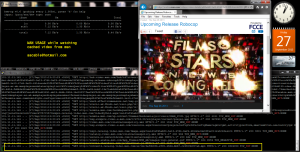

Look at the WAN utilization graph below; it was taken while watching a clip that was not yet in the cache.

WAN utilization of the proxy while watching a new clip (not in cache)


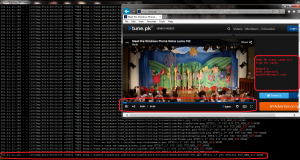


Now look at the WAN utilization graph below, taken while watching a clip that is now in the CACHE.


WAN utilization of the proxy while watching an already cached clip


Playing Video, loaded from the cache chunk by chunk

It loads the first chunk from the cache; if the user keeps watching the clip, it loads the next chunk, and so on.


More Cache HIT Examples

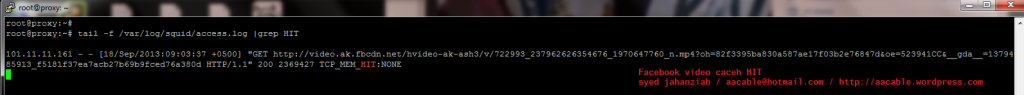

FACEBOOK VIDEO Cache HIT Example:

root@proxy:~# tail -f /var/log/squid/access.log |grep HIT

101.11.11.161 - - [18/Sep/2013:09:03:37 +0500] "GET http://video.ak.fbcdn.net/hvideo-ak-ash3/v/722993_237962626354676_1970647760_n.mp4?oh=82f3395ba830a587ae17f03b2e76847d&oe=523941CC&__gda__=1379485913_f5181f37ea7acb27b69b9fced76a380d HTTP/1.1" 200 2369427 TCP_MEM_HIT:NONE


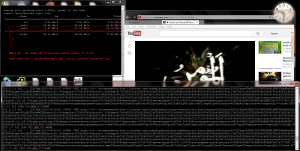

YouTube Videos Cache Hit Example


You can monitor the cache HIT entries (TCP_HIT, TCP_MEM_HIT, etc.) in the Squid access log with:

tail -f /var/log/squid/access.log | grep HIT

10.0.0.161 - - [18/Sep/2013:09:32:05 +0500] "GET http://r5---sn-gvnuxaxjvh-n8ve.c.youtube.com/videoplayback?algorithm=throttle-factor&burst=40&clen=2537620&cp=U0hWTlVLUV9NT0NONl9NRVVDOm9ReG8ybXFFU0hS&cpn=JOisEPFDiHzWwZDK&dur=159.730&expire=1379503285&factor=1.25&fexp=917000%2C912301%2C905611%2C934007%2C914098%2C916625%2C902533%2C924606%2C929117%2C929121%2C929906%2C929907%2C929922%2C929923%2C929127%2C929129%2C929131%2C929930%2C936403%2C925724%2C925726%2C936310%2C925720%2C925722%2C925718%2C925714%2C929917%2C906945%2C929933%2C929935%2C920302%2C906842%2C913428%2C919811%2C935020%2C935021%2C935704%2C932309%2C913563%2C919373%2C930803%2C908536%2C938701%2C931924%2C940501%2C936308%2C909549%2C901608%2C900816%2C912711%2C934507%2C907231%2C936312%2C906001&gir=yes&id=98d455f40d4132a5&ip=93.115.84.195&ipbits=8&itag=140&keepalive=yes&key=yt1&lmt=1370589022851995&ms=au&mt=1379478581&mv=m&range=2138112-2375679&ratebypass=yes&signature=C405B33844DEC9088DD546F2EDEC362737C776E1.5FDB10FD7B4F6C81F884F6FB2ABFDE067D2493A6&source=youtube&sparams=algorithm%2Cburst%2Cclen%2Ccp%2Cdur%2Cfactor%2Cgir%2Cid%2Cip%2Cipbits%2Citag%2Clmt%2Csource%2Cupn%2Cexpire&sver=3&upn=QZy7v7y0uxk HTTP/1.1" 302 1598 TCP_MEM_HIT:NONE

10.0.0.161 - - [18/Sep/2013:09:32:07 +0500] "GET http://r5---sn-gvnuxaxjvh-n8ve.c.youtube.com/videoplayback?algorithm=throttle-factor&burst=40&clen=5380615&cp=U0hWTlVLUV9NT0NONl9NRVVDOm9ReG8ybXFFU0hS&cpn=JOisEPFDiHzWwZDK&dur=159.059&expire=1379503285&factor=1.25&fexp=917000%2C912301%2C905611%2C934007%2C914098%2C916625%2C902533%2C924606%2C929117%2C929121%2C929906%2C929907%2C929922%2C929923%2C929127%2C929129%2C929131%2C929930%2C936403%2C925724%2C925726%2C936310%2C925720%2C925722%2C925718%2C925714%2C929917%2C906945%2C929933%2C929935%2C920302%2C906842%2C913428%2C919811%2C935020%2C935021%2C935704%2C932309%2C913563%2C919373%2C930803%2C908536%2C938701%2C931924%2C940501%2C936308%2C909549%2C901608%2C900816%2C912711%2C934507%2C907231%2C936312%2C906001&gir=yes&id=98d455f40d4132a5&ip=93.115.84.195&ipbits=8&itag=133&keepalive=yes&key=yt1&lmt=1370589028183073&ms=au&mt=1379478581&mv=m&range=4608000-5119999&ratebypass=yes&signature=8A1A558BF931AB3C8F58ADAF55B2488A88B9ADFD.108D982EB17E2F27C829F2521FF611808B4E8CAF&source=youtube&sparams=algorithm%2Cburst%2Cclen%2Ccp%2Cdur%2Cfactor%2Cgir%2Cid%2Cip%2Cipbits%2Citag%2Clmt%2Csource%2Cupn%2Cexpire&sver=3&upn=QZy7v7y0uxk HTTP/1.1" 302 1598 TCP_MEM_HIT:NONE

10.0.0.161 - - [18/Sep/2013:09:32:20 +0500] "GET http://r5---sn-gvnuxaxjvh-n8ve.c.youtube.com/videoplayback?algorithm=throttle-factor&burst=40&clen=2537620&cp=U0hWTlVLUV9NT0NONl9NRVVDOm9ReG8ybXFFU0hS&cpn=JOisEPFDiHzWwZDK&dur=159.730&expire=1379503285&factor=1.25&fexp=917000%2C912301%2C905611%2C934007%2C914098%2C916625%2C902533%2C924606%2C929117%2C929121%2C929906%2C929907%2C929922%2C929923%2C929127%2C929129%2C929131%2C929930%2C936403%2C925724%2C925726%2C936310%2C925720%2C925722%2C925718%2C925714%2C929917%2C906945%2C929933%2C929935%2C920302%2C906842%2C913428%2C919811%2C935020%2C935021%2C935704%2C932309%2C913563%2C919373%2C930803%2C908536%2C938701%2C931924%2C940501%2C936308%2C909549%2C901608%2C900816%2C912711%2C934507%2C907231%2C936312%2C906001&gir=yes&id=98d455f40d4132a5&ip=93.115.84.195&ipbits=8&itag=140&keepalive=yes&key=yt1&lmt=1370589022851995&ms=au&mt=1379478581&mv=m&range=2375680-2615295&ratebypass=yes&signature=C405B33844DEC9088DD546F2EDEC362737C776E1.5FDB10FD7B4F6C81F884F6FB2ABFDE067D2493A6&source=youtube&sparams=algorithm%2Cburst%2Cclen%2Ccp%2Cdur%2Cfactor%2Cgir%2Cid%2Cip%2Cipbits%2Citag%2Clmt%2Csource%2Cupn%2Cexpire&sver=3&upn=QZy7v7y0uxk HTTP/1.1" 302 1598 TCP_MEM_HIT:NONE

10.0.0.161 - - [18/Sep/2013:09:32:22 +0500] "GET http://r5---sn-gvnuxaxjvh-n8ve.c.youtube.com/videoplayback?algorithm=throttle-factor&burst=40&clen=5380615&cp=U0hWTlVLUV9NT0NONl9NRVVDOm9ReG8ybXFFU0hS&cpn=JOisEPFDiHzWwZDK&dur=159.059&expire=1379503285&factor=1.25&fexp=917000%2C912301%2C905611%2C934007%2C914098%2C916625%2C902533%2C924606%2C929117%2C929121%2C929906%2C929907%2C929922%2C929923%2C929127%2C929129%2C929131%2C929930%2C936403%2C925724%2C925726%2C936310%2C925720%2C925722%2C925718%2C925714%2C929917%2C906945%2C929933%2C929935%2C920302%2C906842%2C913428%2C919811%2C935020%2C935021%2C935704%2C932309%2C913563%2C919373%2C930803%2C908536%2C938701%2C931924%2C940501%2C936308%2C909549%2C901608%2C900816%2C912711%2C934507%2C907231%2C936312%2C906001&gir=yes&id=98d455f40d4132a5&ip=93.115.84.195&ipbits=8&itag=133&keepalive=yes&key=yt1&lmt=1370589028183073&ms=au&mt=1379478581&mv=m&range=5120000-5634047&ratebypass=yes&signature=8A1A558BF931AB3C8F58ADAF55B2488A88B9ADFD.108D982EB17E2F27C829F2521FF611808B4E8CAF&source=youtube&sparams=algorithm%2Cburst%2Cclen%2Ccp%2Cdur%2Cfactor%2Cgir%2Cid%2Cip%2Cipbits%2Citag%2Clmt%2Csource%2Cupn%2Cexpire&sver=3&upn=QZy7v7y0uxk HTTP/1.1" 302 1598 TCP_MEM_HIT:NONE


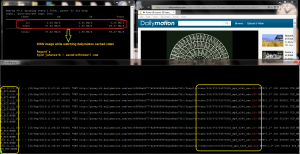

DAILYMOTION Videos Cache Hit Example

Videos that are not in the cache:

101.11.11.161 - - [30/Sep/2013:10:45:25 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(1)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 932336 TCP_MISS:DIRECT

101.11.11.161 - - [30/Sep/2013:10:45:31 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(2)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 580913 TCP_MISS:DIRECT

101.11.11.161 - - [30/Sep/2013:10:45:41 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(3)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 655602 TCP_MISS:DIRECT

101.11.11.161 - - [30/Sep/2013:10:45:51 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(4)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 545532 TCP_MISS:DIRECT

101.11.11.161 - - [30/Sep/2013:10:46:02 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(5)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 645288 TCP_MISS:DIRECT


The same videos, now served from the cache (TCP_MEM_HIT):

101.11.11.161 - - [30/Sep/2013:11:07:26 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(1)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 932345 TCP_MEM_HIT:NONE

101.11.11.161 - - [30/Sep/2013:11:07:31 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(2)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 580922 TCP_MEM_HIT:NONE

101.11.11.161 - - [30/Sep/2013:11:07:43 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(3)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 655611 TCP_MEM_HIT:NONE

101.11.11.161 - - [30/Sep/2013:11:07:52 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(4)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 545541 TCP_MEM_HIT:NONE

101.11.11.161 - - [30/Sep/2013:11:08:03 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(5)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 645297 TCP_MEM_HIT:NONE

101.11.11.161 - - [30/Sep/2013:11:08:12 +0500] "GET http://proxy-62.dailymotion.com/sec(4f636a4f77762894959440a2a4bbc73f)/frag(6)/video/233/073/54370332_mp4_h264_aac.flv HTTP/1.1" 200 551354 TCP_MEM_HIT:NONE

And some more:

101.11.11.161 - - [01/Oct/2013:12:05:45 +0500] "GET http://vid2.ak.dmcdn.net/sec(bb78b176d5d55fa2a74cc2b3a7d9fc1a)/frag(1)/video/235/784/69487532_mp4_h264_aac_hq.flv HTTP/1.1" 200 460619 TCP_MISS:DIRECT

101.11.11.161 - - [01/Oct/2013:12:05:45 +0500] "GET http://vid2.ak.dmcdn.net/sec(d1bc558a82841a2be2990fb944e0d603)/frag(2)/video/235/784/69487532_mp4_h264_aac_ld.flv HTTP/1.1" 200 242336 TCP_MEM_HIT:NONE

101.11.11.161 - - [01/Oct/2013:12:05:54 +0500] "GET http://vid2.ak.dmcdn.net/sec(09b97b67e9cdc1d4f4e41f2ddf6d027b)/frag(3)/video/235/784/69487532_mp4_h264_aac.flv HTTP/1.1" 200 361845 TCP_MEM_HIT:NONE

101.11.11.161 - - [01/Oct/2013:12:06:26 +0500] "GET http://vid2.ak.dmcdn.net/sec(09b97b67e9cdc1d4f4e41f2ddf6d027b)/frag(4)/video/235/784/69487532_mp4_h264_aac.flv HTTP/1.1" 200 384313 TCP_MISS:DIRECT


AOL Videos Cache Hit Example


MSN Videos Cache Hit Example

101.11.11.161 - - [27/Sep/2013:13:03:31 +0500] "GET http://content4.catalog.video.msn.com/e2/ds/6af0b936-2895-48dd-bbb7-c26803b957ab.mp4 HTTP/1.1" 200 9349059 TCP_HIT:NONE


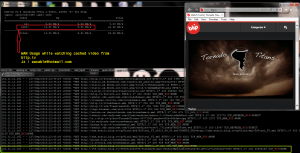

TUNE.PK Videos Cache Hit Example


101.11.11.161 - - [19/Sep/2013:09:48:02 +0500] "GET http://storage4.tunefiles.com/files/videos/2013/06/26/1372274819407c1.flv HTTP/1.1" 200 5338729 TCP_HIT:NONE


BLIP.TV Videos Cache Hit Example

101.11.11.161 - - [27/Sep/2013:12:45:27 +0500] "GET http://j46.video2.blip.tv/6640012033790/TornadoTitans-Season3Episode10Twins738.m4v?ir=12035&sr=1835 HTTP/1.1" 200 20540163 TCP_HIT:NONE


APNIISP.COM Audio & Videos Cache Hit Example


101.11.11.161 - - [27/Sep/2013:12:33:09 +0500] "GET http://songs.apniisp.com/videos/Qismat%20Apnay%20Haat%20Mein%20(Apniisp.Com).wmv HTTP/1.1" 200 94714 TCP_HIT:NONE

101.11.11.161 - - [27/Sep/2013:12:33:10 +0500] "GET http://songs.apniisp.com/videos/Qismat%20Apnay%20Haat%20Mein%20(Apniisp.Com).wmv HTTP/1.1" 304 333 TCP_IMS_HIT:NONE


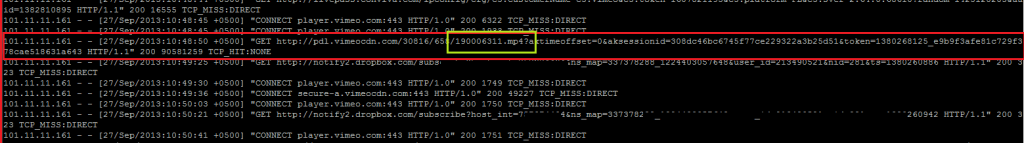

VIMEO Videos Cache Hit Example

101.11.11.161 - - [27/Sep/2013:10:48:50 +0500] "GET http://pdl.vimeocdn.com/30816/658/190006311.mp4?aktimeoffset=0&aksessionid=308dc46bc6745f77ce229322a3b25d51&token=1380268125_e9b9f3afe81c729f378cae518631a643 HTTP/1.1" 200 90581259 TCP_HIT:NONE

♥


Regards

Syed Jahanzaib

Dear bro how to solve this….

root@khan-desktop:~# squid -z

2012/01/20 01:17:52| Creating Swap Directories

FATAL: Failed to make swap directory /cache1: (13) Permission denied

Squid Cache (Version 2.7.STABLE7): Terminated abnormally.

CPU Usage: 0.000 seconds = 0.000 user + 0.000 sys

Maximum Resident Size: 3536 KB

Page faults with physical i/o: 0

wbr,

NASIR


Comment by NASIR — January 20, 2012 @ 12:19 AM

It means you didn’t read the instructions provided with the config file.

You have to assign proper permission to /cache1 dir so that it can be writable by squid proxy user.

If you are running Ubuntu, issue these commands:

mkdir /cache1

chown proxy:proxy /cache1

chmod 777 /cache1

Then run

squid -z

Then it will create the cache dir successfully without any error (provided you don’t have any config mistakes).


Comment by Syed Jahanzaib / Pinochio~:) — January 20, 2012 @ 10:45 AM

Syed, I had the same problem as NASIR.

i solved it by typing :

chmod -R 777 /cache1/

because it was trying to create a folder under /cache1/dir1/dir2 like this …

now it’s OK 🙂 thx for this gr8 tutorial .

I like ur blog and i’m visiting everyday for new tutorials …


Comment by Nori — January 20, 2012 @ 6:33 PM

Yeah, it was mentioned in the article too, but sometimes people are in such a hurry to implement things that they skip a few steps, or follow them blindly without adapting them to their distribution or their network.


Comment by Syed Jahanzaib / Pinochio~:) — January 21, 2012 @ 9:11 PM

Dear Syed,

I followed your article and have already implemented it in our network. Within two days we are getting a very good hit rate. Now I want to make a replica of the same configuration (without the cache) and set up another proxy server to distribute the HTTP load of various packages to a specific proxy server. As I did earlier for proxy1, I added a mangle rule in the Mikrotik 3.30 router and marked DSCP = 12 for zph_local 0x30. It works brilliantly for users who are redirected to proxy1 and get cached content from proxy1: Mikrotik marks those packets and sends the cache_hit data at LAN speed. But for users on proxy2 who get cached data from proxy1, I can see SIBLING_HIT in access.log, so I set zph_sibling 0x30 to mark those packets in Mikrotik, but I don’t get LAN speed.

I believe something is wrong in squid …

Do you have any suggestions?


Comment by Saiful Alam — January 22, 2012 @ 3:13 AM

Try removing tcp_outgoing_tos; it is probably replacing the ZPH values with 0x30.


Comment by Syed Jahanzaib / Pinochio~:) — September 23, 2013 @ 8:36 AM

I will try it in my lab.


Comment by Syed Jahanzaib / Pinochio~:) — September 23, 2013 @ 8:36 AM

ya its working fine….. thanks Syed Jahanzaib. you are really great…

keep it up..

wish you all the best..

wbr,

Muhammad Nasir Javed


Comment by Muhammad Nasir Javed — January 26, 2012 @ 11:35 AM

Assalam-o-Alaikum

Shah gee My name is Muhammad Imran Khan

I read your blog and successfully configured my squid 2.7. I also tested it, and it is working fine.

my question is how can i enable downloading cache in squid

Kindly help me My e-mail is

imran_niazi_2004@yahoo.com

Regards:)


Comment by Muhammad Imran Khan — February 1, 2012 @ 5:32 PM

If you are using a good refresh pattern, squid will by default cache all contents that are cacheable.

However, if you download via IDM or any other download accelerator, the file will not be cached by default. Once it has been downloaded by a browser, other users can download it from the cache, either with a browser or with IDM.


Comment by Syed Jahanzaib / Pinochio~:) — February 2, 2012 @ 10:40 AM

What do you think would be good hardware to use for squid if we have 200 users, and their total traffic for the whole month is just 1.7TB download and 400GB upload?

That is on the server, excluding .flv and .mp4 files and files bigger than 30MB.

In this case, what hardware would you suggest I use?

(btw, I would like to use a Dell or HP computer. Can you tell me which one I can use without needing too much power, unlike servers with 2800W, because sometimes I need to run on inverters when there is no power at our base. I mean, which model of computer do you prefer …)


Comment by Nori — February 2, 2012 @ 10:03 PM

Any powerful server usually requires higher power consumption.

For about 200 users, any 3 GHz dual core or a quad core will be more than enough.

The main focus should be on RAM and HDD; the CPU is less of a concern.

Adding 4 to 16 GB of RAM is a good idea to get good performance from squid. Add 2 HDDs, one for the OS and a second dedicated to the CACHE.


Comment by Syed Jahanzaib / Pinochio~:) — February 2, 2012 @ 10:59 PM

Hello, I have a problem and I don’t know what’s wrong. Can you help me? The only differences from your config are the IP address and a different directory for the cache.

Here is what’s happening:

2012/02/06 00:48:26| Starting Squid Cache version 2.7.STABLE9 for x86_64-pc-linux-gnu…

2012/02/06 00:48:26| Process ID 8837

2012/02/06 00:48:26| With 1024 file descriptors available

2012/02/06 00:48:26| Using epoll for the IO loop

2012/02/06 00:48:26| Performing DNS Tests…

2012/02/06 00:48:26| Successful DNS name lookup tests…

2012/02/06 00:48:26| DNS Socket created at 0.0.0.0, port 54307, FD 6

2012/02/06 00:48:26| Adding domain enet.rs from /etc/resolv.conf

2012/02/06 00:48:26| Adding nameserver 192.168.2.129 from /etc/resolv.conf

2012/02/06 00:48:26| helperOpenServers: Starting 7 ‘storeurl.pl’ processes

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

2012/02/06 00:48:26| User-Agent logging is disabled.

2012/02/06 00:48:26| Referer logging is disabled.

2012/02/06 00:48:26| logfileOpen: opening log /var/log/squid/access.log

2012/02/06 00:48:26| logfileOpen: opening log /var/log/squid/access.log

2012/02/06 00:48:26| Swap maxSize 174080000 + 1048576 KB, estimated 13471428 objects

2012/02/06 00:48:26| Target number of buckets: 673571

2012/02/06 00:48:26| Using 1048576 Store buckets

2012/02/06 00:48:26| Max Mem size: 1048576 KB

2012/02/06 00:48:26| Max Swap size: 174080000 KB

2012/02/06 00:48:26| Local cache digest enabled; rebuild/rewrite every 3600/3600 sec

2012/02/06 00:48:26| logfileOpen: opening log /var/log/squid/store.log

2012/02/06 00:48:26| Rebuilding storage in /var/spool/squid (DIRTY)

2012/02/06 00:48:26| Using Least Load store dir selection

2012/02/06 00:48:26| Current Directory is /var/spool

2012/02/06 00:48:26| Loaded Icons.

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

2012/02/06 00:48:26| Accepting transparently proxied HTTP connections at 192.168.1.202, port 3128, FD 21.

2012/02/06 00:48:26| HTCP Disabled.

2012/02/06 00:48:26| WCCP Disabled.

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

2012/02/06 00:48:26| Ready to serve requests.

2012/02/06 00:48:26| WARNING: store_rewriter #1 (FD 7) exited

2012/02/06 00:48:26| WARNING: store_rewriter #2 (FD 8) exited

2012/02/06 00:48:26| WARNING: store_rewriter #3 (FD 9) exited

2012/02/06 00:48:26| WARNING: store_rewriter #4 (FD 10) exited

2012/02/06 00:48:26| Too few store_rewriter processes are running

2012/02/06 00:48:26| ALERT: setgid: (1) Operation not permitted

FATAL: The store_rewriter helpers are crashing too rapidly, need help!

i really don’t know what to do anymore… Thanks


Comment by nenad — February 6, 2012 @ 4:52 AM

There might be some problem with the storeurl.pl content; perhaps it was not copy-pasted correctly.

First try without storeurl.pl.

If it works OK, then try to create storeurl.pl from the following URL.

https://aacable.wordpress.com/2012/01/11/howto-cache-youtube-with-squid-lusca-and-bypass-cached-videos-from-mikrotik-queue/


Comment by Syed Jahanzaib / Pinochio~:) — February 6, 2012 @ 8:53 AM

Thanks, it was problem with storeurl.pl

Now squid starts, and another thing i have to comment out log parameters, because squid don’t understand it…

Now Ill test it…

Thanks again


Comment by nenad — February 6, 2012 @ 11:43 PM

Another very strange thing…

When using LUSCA, while system runs everything is ok, youtube is caching… but after I reboot ubuntu, i have same error like i mention above, then i create again storeurl.pl and lusca is starting normal… Do you maybe know why this is happening??? And ofc because of this problem, lusca cannot start on boot… Do you have any idea

Thanks for helping me!


Comment by nenad — February 7, 2012 @ 5:14 AM

I have updated the squid.conf. It was WordPress that changed the code with special characters; it really annoys me sometimes 🙂

Anyhow, check it again.


Comment by Syed Jahanzaib / Pinochio~:) — February 7, 2012 @ 9:23 AM

and another thing, with this log format how to make sarg to create report….

Thanks


Comment by nenad — February 7, 2012 @ 6:47 AM

I have used this log format with SARG and it works fine.

Sometimes WORDPRESS really messes with pasted code; sorry for the inconvenience. I have re-pasted the config, check it again.


Comment by Syed Jahanzaib / Pinochio~:) — February 7, 2012 @ 9:16 AM

SARG CONFIG


Comment by Syed Jahanzaib / Pinochio~:) — February 7, 2012 @ 9:21 AM

Same thing: when rebooting Ubuntu, lusca won’t start, starting it manually results in errors, and then I just re-create storeurl.pl and it starts normally…

I didn't manage to get SARG to create the log.

And yes, another thing: I keep receiving this message: clientNatLookup: NF getsockopt(SO_ORIGINAL_DST) failed: (92) Protocol not available

I don't use NAT for the proxy; Ubuntu knows all the routes.

Thanks a lot man…

Best regards

Comment by nenad — February 7, 2012 @ 4:35 PM

This message means that Squid received a request, but the kernel has no NAT tracking information about its IP address.

Comment by Syed Jahanzaib / Pinochio~:) — February 8, 2012 @ 12:10 AM

My networks are 172.16.x.x / 255.255.0.0, and Squid gets requests from those addresses. In the boot-up and active configuration I have an ip route on eth1: address 172.16.0.0 mask 255.255.0.0 gateway x.x.x.x. I think that is enough, but maybe not…

Comment by nenad — February 8, 2012 @ 12:15 AM

Hi, any news about the YouTube error?

Comment by tom — February 10, 2012 @ 9:47 AM

chuddy said: create a redirect that will remove the “&range=xxx-xxx”.

Comment by tom — February 10, 2012 @ 9:48 AM

Hmm, I am not able to understand; an example would be useful.
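A minimal bash sketch of chuddy's suggestion, under stated assumptions: it only shows the URL transformation, assumes the parameter looks exactly like `range=<start>-<end>` as quoted above, and assumes Squid 2.7's non-concurrent rewrite-helper protocol (one request per input line with the URL first, one rewritten URL per output line). The names `strip_range` and `range_rewrite_helper` are hypothetical, and whether dropping the parameter actually makes the videos cache has not been verified here.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: drop a "range=<start>-<end>" query parameter from a URL.
# Handles the parameter appearing after "&", first after "?", or alone.
strip_range() {
  sed -E \
    -e 's/&range=[0-9]+-[0-9]*//g' \
    -e 's/\?range=[0-9]+-[0-9]*&/?/' \
    -e 's/\?range=[0-9]+-[0-9]*$//'
}

# Hypothetical rewrite-helper loop: read "URL client ident method ..." lines
# from squid on stdin and answer with the rewritten URL, one per line.
# Assumes no concurrency channel IDs are prepended to the input lines.
range_rewrite_helper() {
  local url rest
  while read -r url rest; do
    printf '%s\n' "$(printf '%s\n' "$url" | strip_range)"
  done
}
```

To try it as a helper, one would append a call to `range_rewrite_helper` at the end of the script and point squid's `url_rewrite_program` at it. Starting sed once per request is slow, so this is only for experimenting; a Perl helper in the style of storeurl.pl would scale better.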

Comment by Syed Jahanzaib / Pinochio~:) — February 10, 2012 @ 10:23 AM

I am using ubuntu-11.10 i386.

I implemented this config (I had to remove all the ” ” in your code, because when I copy it, a lot of ‘s show up, for example: refresh_pattern -i \.(pp(t?x)|s|t)|pdf|rtf|wax|wm(a|v)|wmx|wpl|cb(r|z|t)|xl(s?x)|do(c?x)|flv|x-flv) 43200 80% 43200 ignore-no-cache override-expire override-lastmod reload-into-ims

refresh_pattern -i (/cgi-bin/|\?) 0 0% 0 )

Anyway, squid is now running and seems to be working, BUT it does not seem to be caching the youtube videos.

With one video I got a 500 Internal Server Error, and the others just do not cache, even though I see the following:

root@eesa-server:~# tail -f /var/log/squid/access.log | grep HIT

192.168.1.45 - - [19/Feb/2012:16:47:11 +0200] "GET http://o-o.preferred.mweb-jnb1.v23.lscache4.c.youtube.com/generate_204? HTTP/1.1" 204 274 TCP_NEGATIVE_HIT:NONE

192.168.1.45 - - [19/Feb/2012:16:47:37 +0200] "GET http://o-o.preferred.mweb-jnb1.v23.lscache4.c.youtube.com/generate_204? HTTP/1.1" 204 274 TCP_NEGATIVE_HIT:NONE

192.168.1.45 - - [19/Feb/2012:16:47:39 +0200] "GET http://clients1.google.com/generate_204 HTTP/1.1" 204 274 TCP_NEGATIVE_HIT:NONE

192.168.1.45 - - [19/Feb/2012:16:47:53 +0200] "GET http://www.youtube.com/watch? HTTP/1.1" 500 3143 TCP_NEGATIVE_HIT:NONE

What could be the problem?

My server IP is 192.168.1.28 and my IP is 192.168.1.45 (I am testing it on a LAN first).

Comment by Eesa — February 19, 2012 @ 7:52 PM

Hmm, I have tested this config on various networks and it works fine.

To copy a script, you will see an icon on the script like this “”; click on it, and a new window will appear showing the RAW code.

HIT shows your videos are caching fine.

Have you set up any queue for speed limitation?

On the Ubuntu box, open a terminal and issue the following command:

ps aux | grep squid

Check whether you can see 5-7 storeurl.pl entries.
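To make that check a little more mechanical, here is a small bash sketch (the function name `count_storeurl_helpers` is hypothetical) that counts the storeurl.pl processes in `ps` output read from stdin. The expected number presumably matches whatever `storeurl_rewrite_children` is set to in squid.conf; the 5-7 above is the author's figure, not a fixed rule.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: count storeurl.pl helper processes in ps output on stdin.
# The "[s]" trick stops the pattern from matching a grep command line that
# contains the literal text "[s]toreurl".
count_storeurl_helpers() {
  # grep -c prints 0 (and exits 1) when nothing matches; "|| true" keeps the
  # function's exit status 0 so it can be used under "set -e".
  grep -c '[s]toreurl\.pl' || true
}
```

Run it as `ps aux | count_storeurl_helpers`; a result of 0 while squid is running suggests the helpers failed to start.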

Comment by Syed Jahanzaib / Pinochio~:) — February 20, 2012 @ 9:34 AM

I used the source button and copied and re-pasted the code into both squid.conf and storeurl.pl. I restarted squid, but still no YouTube caching is taking place.

Here is the output of ps aux | grep squid:

root@eesa-server:/home/eesa# ps aux | grep squid

proxy 2105 0.3 1.3 13884 6780 ? Ssl 20:01 0:00 /usr/sbin/squid -N -D

proxy 2106 0.0 0.2 3948 1444 ? Ss 20:01 0:00 /usr/bin/perl /etc/squid/storeurl.pl

proxy 2107 0.0 0.2 3948 1440 ? Ss 20:01 0:00 /usr/bin/perl /etc/squid/storeurl.pl

proxy 2108 0.0 0.2 3948 1444 ? Ss 20:01 0:00 /usr/bin/perl /etc/squid/storeurl.pl

proxy 2109 0.0 0.2 3948 1440 ? Ss 20:01 0:00 /usr/bin/perl /etc/squid/storeurl.pl

proxy 2110 0.0 0.2 3948 1440 ? Ss 20:01 0:00 /usr/bin/perl /etc/squid/storeurl.pl

proxy 2111 0.0 0.2 3948 1440 ? Ss 20:01 0:00 /usr/bin/perl /etc/squid/storeurl.pl

proxy 2112 0.0 0.2 3948 1444 ? Ss 20:01 0:00 /usr/bin/perl /etc/squid/storeurl.pl

root@eesa-server:/home/eesa#

I use gedit instead of nano to edit the files; could that cause an issue?

Here is exactly what I do.

(I get a few Gtk-WARNINGs when using gedit, but I don't think they are of any importance, as I always see them; they look like this:

(gedit:1795): Gtk-WARNING **: Attempting to store changes into `/root/.local/share/recently-used.xbel', but failed: Failed to create file '/root/.local/share/recently-used.xbel.BS4CAW': No such file or directory)

eesa@eesa-server:~$ sudo su

[sudo] password for eesa:

root@eesa-server:/home/eesa# gedit /etc/squid/squid.conf

Then I paste the config.

root@eesa-server:/home/eesa# gedit /etc/squid/storeurl.pl

Then I paste the config.

—

But I can still see that it is not serving the video from the cache, because my internet connection is being used and the video loads at internet speed, not LAN speed.

Here is the output of tail -f /var/log/squid/access.log | grep HIT when I open the same video over and over again:

root@eesa-server:/home/eesa# tail -f /var/log/squid/access.log | grep HIT

192.168.1.45 - - [20/Feb/2012:20:11:09 +0200] "GET http://clients1.google.com/generate_204 HTTP/1.1" 204 273 TCP_NEGATIVE_HIT:NONE

192.168.1.45 - - [20/Feb/2012:20:11:36 +0200] "GET http://clients1.google.com/generate_204 HTTP/1.1" 204 274 TCP_NEGATIVE_HIT:NONE

192.168.1.45 - - [20/Feb/2012:20:11:36 +0200] "GET http://o-o.preferred.mweb-jnb1.v18.lscache3.c.youtube.com/generate_204? HTTP/1.1" 204 274 TCP_NEGATIVE_HIT:NONE

192.168.1.45 - - [20/Feb/2012:20:11:37 +0200] "GET http://clients1.google.com/generate_204 HTTP/1.1" 204 274 TCP_NEGATIVE_HIT:NONE

192.168.1.45 - - [20/Feb/2012:20:11:56 +0200] "GET http://o-o.preferred.mweb-jnb1.v18.lscache3.c.youtube.com/generate_204? HTTP/1.1" 204 274 TCP_NEGATIVE_HIT:NONE

192.168.1.45 - - [20/Feb/2012:20:11:59 +0200] "GET http://clients1.google.com/generate_204 HTTP/1.1" 204 274 TCP_NEGATIVE_HIT:NONE

I really can't understand it. Should I downgrade the Ubuntu version?

Comment by Eesa — February 20, 2012 @ 11:14 PM

You don’t need to downgrade your ubuntu.

I have tested this configuration at various places, and it has always worked.

Anyhow, you may try this and check the results:

https://aacable.wordpress.com/2012/01/11/howto-cache-youtube-with-squid-lusca-and-bypass-cached-videos-from-mikrotik-queue/

Comment by Syed Jahanzaib / Pinochio~:) — February 21, 2012 @ 9:38 AM

Oh, I forgot to mention: I don't have any MikroTik queues set; this is in a LAN environment for testing purposes.

Comment by Eesa — February 20, 2012 @ 11:20 PM

As salaam alaikum all, I followed all the steps, but it's not caching anything. Please help me.

Comment by ali — February 23, 2012 @ 3:22 AM

Have you tried this ?

https://aacable.wordpress.com/2012/01/11/howto-cache-youtube-with-squid-lusca-and-bypass-cached-videos-from-mikrotik-queue/

Comment by Syed Jahanzaib / Pinochio~:) — February 23, 2012 @ 9:09 AM

When you say “Make sure you have setup proper internet connection in Ubuntu BOX.”, do you mean anything specific?

Or is it enough that I can access the internet from the Ubuntu box?

Comment by Eesa — February 23, 2012 @ 1:11 PM

By proper internet connection I mean just simple internet access, nothing specific.

Comment by Syed Jahanzaib / Pinochio~:) — February 23, 2012 @ 1:38 PM

sac int one is caching HTTP and other file formats, but YouTube videos are not caching, and it is not working in transparent mode?

Comment by ali — February 24, 2012 @ 3:27 AM

I finally got it working on Ubuntu 11

I tried the above, but it never worked; then I tried this: https://aacable.wordpress.com/2012/01/11/howto-cache-youtube-with-squid-lusca-and-bypass-cached-videos-from-mikrotik-queue/

That also never worked.

It was showing HIT but not serving the videos from the cache.

Then I tried this: http://code.google.com/p/proxy-ku/downloads/detail?name=LUSCA_FMI.tar.gz

And that worked!

Now I'm going to try to implement it behind the MikroTik and exempt the squid HIT traffic from the queues.

JazakAllah

Comment by Eesa — February 25, 2012 @ 11:48 PM

Great. Good to hear you finally made it work 🙂

Comment by Syed Jahanzaib / Pinochio~:) — February 27, 2012 @ 8:58 AM

Hi Syed,

I have this problem:

012/02/29 20:29:24| parseConfigFile: squid.conf:148 unrecognized: ‘storeurl_rewrite’

That line is storeurl_rewrite c:\squid\etc\storeurl.conf, but the real command is storeurl_rewrite_program c:\squid\etc\storeurl.conf.

Audio and proxy caching work fine on Windows, but video caching is not working. Please give me a solution to this problem.

Comment by majid Shahzad — February 29, 2012 @ 8:36 PM

I don't have any idea regarding Windows-based squid.

Comment by Syed Jahanzaib / Pinochio~:) — March 1, 2012 @ 9:42 AM

I believe many of the errors were problems with negative-ttl (getting only negative_hits)… your other tutorials have negative-ttl=0, and when I used their squid config it cached videos to disk… I was getting cache only from RAM (or the ISP lol) and was having problems with my ISP's YouTube cache… Sorry for the bad English D= and thanks for the tutorials =)
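Related to Romulo's point: `grep HIT` also matches TCP_NEGATIVE_HIT, which is a negatively cached reply rather than video data served from the cache, and every HIT line in Eesa's logs above is a TCP_NEGATIVE_HIT. A stricter filter keeps only the positive hit tags. This is a minimal bash sketch; the function name `real_hits` is hypothetical, it assumes GNU grep, and the tag list (TCP_HIT, TCP_MEM_HIT, TCP_IMS_HIT, TCP_REFRESH_HIT) is an assumption based on Squid 2.x log tags, not an exhaustive list.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: keep only access.log lines whose result tag is a
# positive cache hit. TCP_NEGATIVE_HIT is excluded because the pattern needs
# HIT directly after "TCP_" or after one of the listed prefixes.
# The tag list is an assumption and not exhaustive.
real_hits() {
  # --line-buffered (GNU grep) so results appear promptly under tail -f.
  grep --line-buffered -E 'TCP_(MEM_|IMS_|REFRESH_)?HIT' || true
}
```

For example, `tail -f /var/log/squid/access.log | real_hits`; adding `| grep videoplayback` narrows it further, on the assumption that the video requests contain that path.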

Comment by Romulo — March 3, 2012 @ 12:38 PM

Aoa, brother, what about the squid traffic: is it encrypted or not? I had a problem because its traffic can be captured with sniffer software. Can you guide me on how to secure it?

Comment by Kamran Rashid — April 1, 2012 @ 6:15 PM

The squid.conf and storeurl.pl are working properly so far.

Great thanks to you…

Squid is working well so far on Ubuntu 11.10.

If any problem or error comes up, I will post it on this page,

even though I had to re-install Ubuntu 11.10 three times on my computer… hehehe

following your steps one by one.

Very useful for saving bandwidth on videos that users open often.

Comment by Ma'el — April 5, 2012 @ 11:19 PM

Yes, in most cases it's very useful.

Comment by Syed Jahanzaib / Pinochio~:) — April 6, 2012 @ 9:05 AM

How do I use this on IPCop?

Comment by Sigit Sugiharto — April 8, 2012 @ 5:13 AM

I have no experience with IPCop.

Comment by Syed Jahanzaib / Pinochio~:) — April 8, 2012 @ 10:38 PM

How do I set squid to log in to the MikroTik hotspot automatically?

Comment by Sigit Sugiharto — April 9, 2012 @ 5:00 PM

You can use MAC authentication to transparently allow squid through the hotspot, bypassing the login page.

Comment by Syed Jahanzaib / Pinochio~:) — April 10, 2012 @ 8:56 AM

I successfully installed it on IPCop 1.4.21, thank you.

Comment by Sigit Sugiharto — April 15, 2012 @ 8:23 AM

How do I cache the webm extension on YouTube?

Comment by bintangnet2011 — April 9, 2012 @ 7:58 PM