An updated version of this Squid setup, which caches YouTube and many other kinds of content, is available. Read the following:

https://aacable.wordpress.com/2012/01/19/youtube-caching-with-squid-2-7-using-storeurl-pl/

Advantages of Youtube Caching !!!

In most parts of the world bandwidth is very expensive, so in some scenarios it is very useful to cache YouTube videos or other Flash videos. If one user downloads a video / Flash file, why shouldn't the same user, or another user, get that file from the cache instead of pulling the same content through the internet pipe again and again?

People on the same LAN sometimes watch the same videos. If I post a YouTube video link on Facebook, Twitter or the like, all my friends will watch it, and that particular video gets viewed many times within a few hours. Videos are usually shared over Facebook or other social networking sites, so the chances of multiple hits on a popular video from my LAN users / friends are high. [syed.jahanzaib]

This is the reason why I wrote this article.

Disadvantages of Youtube Caching !!!

The chance that another user will watch the same video is really slim. If I search for something specific on YouTube, I get hundreds of search results for the same video. What is the chance that another user will search for the same thing and click on the same link / result? YouTube hosts more than 10 million videos, which is too much to cache anyway. You need a lot of space to cache videos, and accordingly you will need fast, modern hardware with tons of space to handle such a giant cache. Anyhow, try it.

AFAIK you are not supposed to cache YouTube videos; YouTube doesn't like it. I don't understand why. Probably because their ranking mechanism relies on views, and possibly completed views, which wouldn't be measurable if the content was served from a local cache.

After struggling unsuccessfully with the storeurl.pl method, I searched for an alternative method to cache YouTube videos. Finally I found a Ruby-based method that uses Nginx to cache YT. Using this method I was able to cache all YouTube videos almost perfectly (not 100%, but it works fine in most cases with some modification; I am sure there will be some improvement in the near future).

Updated: 24th August, 2012

Thanks to Mr. Eliezer Croitoru, Mr. Christian Loth & others for their kind guidance.

Following components were used in this guide.

Proxy Server Configuration:

Ubuntu Desktop 10.04

Nginx version: nginx/0.7.65

Squid Cache: Version 2.7.STABLE7

Client Configuration for testing videos:

Windows XP with Internet Explorer 6

Windows 7 with Internet Explorer 8

Let's start with the Proxy Server Configuration:

1) Update Ubuntu

First install Ubuntu. After installation, configure its networking components, then update the package lists with the following command

apt-get update

2) Install SSH Server [Optional]

Now install SSH server so that you can manage your server remotely using PUTTY or any other ssh tool.

apt-get install openssh-server

3) Install Squid Server

Now install Squid Server by following command

apt-get install squid

[This will install squid 2.7 by default]

Now edit squid configuration files by using following command

nano /etc/squid/squid.conf

Remove all lines and paste the following data

# SQUID 2.7/ Nginx TEST CONFIG FILE
# Email: aacable@hotmail.com
# Web : https://aacable.wordpress.com

# PORT and Transparent Option
http_port 8080 transparent
server_http11 on
icp_port 0

# Cache is set to 5GB in this example (zaib)
store_dir_select_algorithm round-robin
cache_dir aufs /cache1 5000 16 256
cache_replacement_policy heap LFUDA
memory_replacement_policy heap LFUDA

# If you want to enable DATE time n SQUID Logs,use following
emulate_httpd_log on
logformat squid %tl %6tr %>a %Ss/%03Hs %<st %rm %ru %un %Sh/%<A %mt
log_fqdn off

# How much days to keep users access web logs
# You need to rotate your log files with a cron job. For example:
# 0 0 * * * /usr/local/squid/bin/squid -k rotate
logfile_rotate 14
debug_options ALL,1
cache_access_log /var/log/squid/access.log
cache_log /var/log/squid/cache.log
cache_store_log /var/log/squid/store.log

#[zaib] I used DNSAMSQ service for fast dns resolving
#so install by using "apt-get install dnsmasq" first
dns_nameservers 127.0.0.1 221.132.112.8

#ACL Section
acl all src 0.0.0.0/0.0.0.0
acl manager proto cache_object
acl localhost src 127.0.0.1/255.255.255.255
acl to_localhost dst 127.0.0.0/8
acl SSL_ports port 443 563 # https, snews
acl SSL_ports port 873 # rsync
acl Safe_ports port 80 # http
acl Safe_ports port 21 # ftp
acl Safe_ports port 443 563 # https, snews
acl Safe_ports port 70 # gopher
acl Safe_ports port 210 # wais
acl Safe_ports port 1025-65535 # unregistered ports
acl Safe_ports port 280 # http-mgmt
acl Safe_ports port 488 # gss-http
acl Safe_ports port 591 # filemaker
acl Safe_ports port 777 # multiling http
acl Safe_ports port 631 # cups
acl Safe_ports port 873 # rsync
acl Safe_ports port 901 # SWAT
acl purge method PURGE
acl CONNECT method CONNECT
http_access allow manager localhost
http_access deny manager
http_access allow purge localhost
http_access deny purge
http_access deny !Safe_ports
http_access deny CONNECT !SSL_ports
http_access allow localhost
http_access allow all
http_reply_access allow all
icp_access allow all

#[zaib]I used UBUNTU so user is proxy, in FEDORA you may use use squid
cache_effective_user proxy
cache_effective_group proxy
cache_mgr aacable@hotmail.com
visible_hostname proxy.aacable.net
unique_hostname aacable@hotmail.com
cache_mem 8 MB
minimum_object_size 0 bytes
maximum_object_size 100 MB
maximum_object_size_in_memory 128 KB
refresh_pattern ^ftp: 1440 20% 10080
refresh_pattern ^gopher: 1440 0% 1440
refresh_pattern -i (/cgi-bin/|\?) 0 0% 0
refresh_pattern (Release|Packages(.gz)*)$ 0 20% 2880
refresh_pattern . 0 50% 4320
acl apache rep_header Server ^Apache
broken_vary_encoding allow apache

# Youtube Cache Section [zaib]
url_rewrite_program /etc/nginx/nginx.rb
url_rewrite_host_header off
acl youtube_videos url_regex -i ^http://[^/]+\.youtube\.com/videoplayback\?
acl range_request req_header Range .
acl begin_param url_regex -i [?&]begin=
acl id_param url_regex -i [?&]id=
acl itag_param url_regex -i [?&]itag=
acl sver3_param url_regex -i [?&]sver=3
cache_peer 127.0.0.1 parent 8081 0 proxy-only no-query connect-timeout=10
cache_peer_access 127.0.0.1 allow youtube_videos id_param itag_param sver3_param !begin_param !range_request
cache_peer_access 127.0.0.1 deny all

Save & Exit.
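
If you want to check which requests the "Youtube Cache Section" ACLs in squid.conf are meant to hand over to the Nginx peer, the rough Ruby sketch below may help. It only copies the url_regex patterns from the config and combines them the way the cache_peer_access allow line lists them. The helper name peer_allowed? and the sample URLs are my own assumptions for illustration, and it does not model how Squid itself evaluates ACLs internally.

```ruby
# Regexes copied from the url_regex ACLs in the Youtube Cache Section of squid.conf.
YOUTUBE_VIDEOS = %r{^http://[^/]+\.youtube\.com/videoplayback\?}i
BEGIN_PARAM    = /[?&]begin=/i
ID_PARAM       = /[?&]id=/i
ITAG_PARAM     = /[?&]itag=/i
SVER3_PARAM    = /[?&]sver=3/i

# Hypothetical helper (not part of Squid): true when the request would match
# "allow youtube_videos id_param itag_param sver3_param !begin_param !range_request".
def peer_allowed?(url, has_range_header)
  [YOUTUBE_VIDEOS, ID_PARAM, ITAG_PARAM, SVER3_PARAM].all? { |re| url =~ re } &&
    url !~ BEGIN_PARAM &&
    !has_range_header
end

# Made-up sample URL, only for illustration.
sample = "http://v1.cache.youtube.com/videoplayback?sver=3&id=abc&itag=34"
puts peer_allowed?(sample, false)                # plain request
puts peer_allowed?(sample, true)                 # client sent a Range header
puts peer_allowed?(sample + "&begin=10", false)  # seek request with begin=
```

Only the first sample passes all the conditions; a Range header or a begin= parameter keeps the request away from the Nginx peer.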

4) Install Nginx

Now install Nginx by

apt-get install nginx

Now edit its config file by using following command

nano /etc/nginx/nginx.conf

Remove all lines and paste the following data

# This config file is not written by me, [syed.jahanzaib]
# My Email address is inserted Just for tracking purposes
# For more info, visit http://code.google.com/p/youtube-cache/
# Syed Jahanzaib / aacable [at] hotmail.com

user www-data;
worker_processes 4;
pid /var/run/nginx.pid;

events {
    worker_connections 768;
}

http {
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    access_log /var/log/nginx/access.log;
    error_log /var/log/nginx/error.log;
    gzip on;
    gzip_static on;
    gzip_comp_level 6;
    gzip_disable "msie6";
    gzip_vary on;
    gzip_types text/plain text/css text/xml text/javascript application/json application/x-javascript application/xml application/xml+rss;
    gzip_proxied expired no-cache no-store private auth;
    gzip_buffers 16 8k;
    gzip_http_version 1.1;
    include /etc/nginx/conf.d/*.conf;
    include /etc/nginx/sites-enabled/*;

    # starting youtube section
    server {
        listen 127.0.0.1:8081;

        location / {
            root /usr/local/www/nginx_cache/files;
            #try_files "/id=$arg_id.itag=$arg_itag" @proxy_youtube; # Old one
            #try_files "$uri" "/id=$arg_id.itag=$arg_itag.flv" "/id=$arg_id-range=$arg_range.itag=$arg_itag.flv" @proxy_youtube; #old2
            try_files "/id=$arg_id.itag=$arg_itag.range=$arg_range.algo=$arg_algorithm" @proxy_youtube;
        }

        location @proxy_youtube {
            resolver 221.132.112.8;
            proxy_pass http://$host$request_uri;
            proxy_temp_path "/usr/local/www/nginx_cache/tmp";
            #proxy_store "/usr/local/www/nginx_cache/files/id=$arg_id.itag=$arg_itag"; # Old 1
            proxy_store "/usr/local/www/nginx_cache/files/id=$arg_id.itag=$arg_itag.range=$arg_range.algo=$arg_algorithm";
            proxy_ignore_client_abort off;
            proxy_method GET;
            proxy_set_header X-YouTube-Cache "aacable@hotmail.com";
            proxy_set_header Accept "video/*";
            proxy_set_header User-Agent "YouTube Cacher (nginx)";
            proxy_set_header Accept-Encoding "";
            proxy_set_header Accept-Language "";
            proxy_set_header Accept-Charset "";
            proxy_set_header Cache-Control "";
        }
    }
}

Save & Exit.
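
The try_files and proxy_store lines in the config above build the cache file name from the id, itag, range and algorithm query arguments. To get a feel for which file a given videoplayback URL ends up in, here is a small Ruby sketch of that naming. The helper name cache_file_name and the sample URL are assumptions of mine, not something taken from Nginx or from the youtube-cache project.

```ruby
# Hypothetical helper: builds the file name that the try_files / proxy_store
# pattern "id=$arg_id.itag=$arg_itag.range=$arg_range.algo=$arg_algorithm"
# would produce for a URL. Values are used as-is, without URL decoding.
def cache_file_name(url)
  query = url.split("?", 2)[1].to_s
  args = {}
  query.split("&").each do |pair|
    key, value = pair.split("=", 2)
    args[key] = value.to_s
  end
  # A missing argument becomes an empty string, like an unset $arg_* variable.
  "id=#{args['id']}.itag=#{args['itag']}.range=#{args['range']}.algo=#{args['algorithm']}"
end

# Made-up sample URL, only for illustration.
url = "http://v1.cache.youtube.com/videoplayback?id=4a7d9b42ce959d72&itag=34&range=0-1781759&algorithm=throttle-factor"
puts cache_file_name(url)
```

Because the range is part of the name, each chunk of a video is stored as its own file, so a clip watched to the end leaves several files in /usr/local/www/nginx_cache/files.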

Now Create directories to hold cache files

mkdir /usr/local/www

mkdir /usr/local/www/nginx_cache

mkdir /usr/local/www/nginx_cache/tmp

mkdir /usr/local/www/nginx_cache/files

chown www-data /usr/local/www/nginx_cache/files/ -Rf

Now create nginx .rb file

touch /etc/nginx/nginx.rb

chmod 755 /etc/nginx/nginx.rb

nano /etc/nginx/nginx.rb

Paste the following data in this newly created file

#!/usr/bin/env ruby1.8
# This script is not written by me,
# My Email address is inserted Just for tracking purposes
# For more info, visit http://code.google.com/p/youtube-cache/
# Syed Jahanzaib / aacable [at] hotmail.com
# url_rewrite_program <path>/nginx.rb
# url_rewrite_host_header off

require "syslog"
require "base64"

class SquidRequest
  attr_accessor :url, :user
  attr_reader :client_ip, :method

  def method=(s)
    @method = s.downcase
  end

  def client_ip=(s)
    @client_ip = s.split('/').first
  end
end

def read_requests
  # URL <SP> client_ip "/" fqdn <SP> user <SP> method [<SP> kvpairs]<NL>
  STDIN.each_line do |ln|
    r = SquidRequest.new
    r.url, r.client_ip, r.user, r.method, *dummy = ln.rstrip.split(' ')
    (STDOUT << "#{yield r}\n").flush
  end
end

def log(msg)
  Syslog.log(Syslog::LOG_ERR, "%s", msg)
end

def main
  Syslog.open('nginx.rb', Syslog::LOG_PID)
  log("Started")

  read_requests do |r|
    if r.method == 'get' && r.url !~ /[?&]begin=/ && r.url =~ %r{\Ahttp://[^/]+\.youtube\.com/(videoplayback\?.*)\z}
      log("YouTube Video [#{r.url}].")
      "http://127.0.0.1:8081/#{$1}"
    else
      r.url
    end
  end
end

main

Save & Exit.
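
Before wiring the script into Squid, it can help to see what the rewrite rule does to a few request lines. The sketch below repeats the same condition and regex from nginx.rb as a plain function, without syslog or STDIN handling, so it can be run on its own. The name rewrite_url and the sample request lines are made up for this illustration.

```ruby
# Same regex as in nginx.rb: captures "videoplayback?..." from a YouTube URL.
YT_VIDEO = %r{\Ahttp://[^/]+\.youtube\.com/(videoplayback\?.*)\z}

# Hypothetical helper: returns the URL Squid would receive back from the rewriter.
def rewrite_url(url, http_method)
  if http_method.downcase == "get" && url !~ /[?&]begin=/ && (m = YT_VIDEO.match(url))
    "http://127.0.0.1:8081/#{m[1]}"   # send the request to the local Nginx
  else
    url                               # everything else passes through unchanged
  end
end

# Made-up request lines in the "URL client_ip/fqdn user method" layout.
samples = [
  "http://v1.cache.youtube.com/videoplayback?id=abc&itag=34 10.0.0.5/- - GET",
  "http://v1.cache.youtube.com/videoplayback?id=abc&itag=34&begin=100 10.0.0.5/- - GET",
  "http://www.example.com/index.html 10.0.0.5/- - GET",
]

samples.each do |line|
  url, _client, _user, http_method = line.split(" ")
  puts rewrite_url(url, http_method)
end
```

Only the first line is rewritten to 127.0.0.1:8081; the request with begin= and the non-YouTube request come back untouched.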

5) Install RUBY

What is RUBY?

Ruby is a dynamic, open source programming language with a focus on simplicity and productivity. It has an elegant syntax that is natural to read and easy to write. [syed.jahanzaib]

Now install RUBY by following command

apt-get install ruby

6) Configure Squid Cache DIR and Permissions

Now create cache dir and assign proper permission to proxy user

mkdir /cache1

chown proxy:proxy /cache1

chmod -R 777 /cache1

Now initialize squid cache directories by

squid -z

You should see Following message

Creating Swap Directories

7) Finally Start/restart SQUID & Nginx

service squid start

service nginx restart

Now, from a test PC, open YouTube and play any video. After it has downloaded completely, delete the browser cache and play the same video again. This time it will be served from the cache. You can verify this by monitoring your WAN link utilization while playing the cached file.

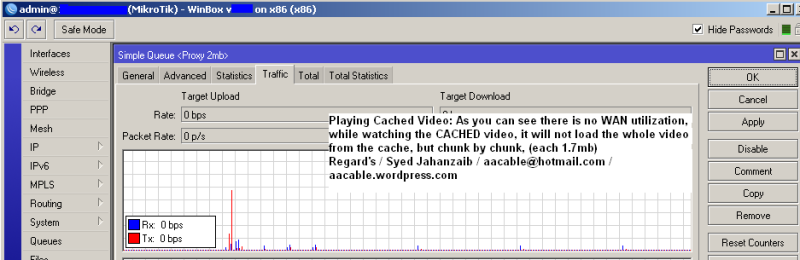

Look at the WAN utilization graph below; it was taken while watching a clip that is not in the cache.

Now look at the WAN utilization graph below; it was taken while watching the clip once it was in the cache.

It loads the first chunk from the cache; if the user keeps watching the clip, it loads the next chunk at the end of the first chunk, and continues to do so.

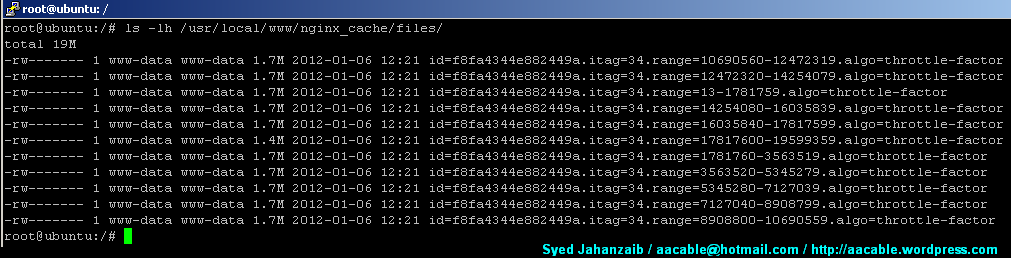

Video cache files can be found in following locations.

/usr/local/www/nginx_cache/files

e.g:

ls -lh /usr/local/www/nginx_cache/files

The file listed above shows that the clip is in 360p quality and that its length is 5:54 minutes.

itag=34 shows the video quality is 360p.
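
If you have many files in the cache folder, a few lines of Ruby can pull the video id and itag out of each file name. This is only a sketch: the helper name describe_cache_file is made up, and in the quality table only 34 = 360p comes from this article; the other itag values are assumptions from memory and may be wrong.

```ruby
# itag => quality. Only "34" => "360p" is confirmed by the article;
# the remaining entries are assumptions and should be verified.
ITAG_QUALITY = { "5" => "240p", "34" => "360p", "35" => "480p", "22" => "720p" }

# Hypothetical helper: describes a cache file from its name alone.
def describe_cache_file(name)
  id   = name[/\bid=([^.]+)/, 1]
  itag = name[/\bitag=(\d+)/, 1]
  "video #{id}: itag #{itag} (#{ITAG_QUALITY.fetch(itag, 'unknown')})"
end

puts describe_cache_file("id=4a7d9b42ce959d72.itag=34")
```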

Credits: Thanks to Mr. Eliezer Croitoru, Mr. Christian Loth & others for their kind guidance.

Find files that have not been accessed for x days. This is useful for deleting old cache files that nobody has requested in that time.

find /usr/local/www/nginx_cache/files -atime +30 -type f
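
The same check can be sketched in Ruby if you prefer to script the cleanup. The function name stale_files is my own, the 30 days simply mirror the find example, and the age test is a plain "older than N x 24 hours" comparison, so it may differ slightly from how find rounds -atime. It only lists files and deletes nothing.

```ruby
# Hypothetical helper: returns regular files under dir whose last access time
# is more than `days` days (counted as 24-hour periods) before `now`.
def stale_files(dir, days, now = Time.now)
  cutoff = now - days * 24 * 60 * 60
  Dir.glob(File.join(dir, "**", "*")).select do |path|
    File.file?(path) && File.atime(path) < cutoff
  end
end

# Lists candidates only; prints nothing if the folder is empty or missing.
stale_files("/usr/local/www/nginx_cache/files", 30).each { |path| puts path }
```

Note that this relies on the filesystem recording access times; if the cache partition is mounted with noatime, neither this sketch nor the find command will give meaningful results.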

Regards,

Syed Jahanzaib

What about “an error occurred”? Did you get any solution for it or not? I face the same problem.

Adeel Ahmed

http://www.facebook.com/wifitech

Comment by adeelkml — August 13, 2012 @ 1:28 PM

Have u tried 240p quality ?

Comment by Syed Jahanzaib / Pinochio~:) — August 13, 2012 @ 6:37 PM

yeah it works fine at 240p….do we need to make changes to cache other video sites?

can we use storeurl.pl and Nginx together?

Comment by adeelkml — August 13, 2012 @ 10:45 PM

i used 240p,480p and 720p these all works fine…..

i use Smart Video Firefox Plugin which forces the user to watch video in desired quality every time…..It automatically select your desired quality on each n every video.

http:// youtu.be/ 5BejfxzXqMw

Comment by adeelkml — August 17, 2012 @ 5:31 AM

Salam sir, the YouTube cache does work, but YouTube HQ videos do not open; an error comes up.

Comment by M.Tahir Shafiq — August 25, 2012 @ 7:07 PM

There are some limitations and restrictions using this method. I will post more findings about it next week,

Comment by Syed Jahanzaib / Pinochio~:) — August 26, 2012 @ 10:02 AM

thanks for new YT cache

but in squid.conf there is no ZPH directives to mark cache content, so that it can later pick by Mikrotik.

Did you find the solution ???

Did Nginx method cache Windows Update , AntiVirus Update and (Google,MSN,CNN,Metacafe,etc) Videos ???

Comment by Ahmed Morgan — August 13, 2012 @ 4:43 PM

This method is just an example for caching YouTube videos. You can also integrate it with Squid to cache other dynamic content. I will do some more testing and will post updates whenever I get time. The problem is that I have a job in a Microsoft environment and I only get 1 free day in 10-15 days to do my personal testing, so it's hard to manage time for R&D.

🙂

Comment by Syed Jahanzaib / Pinochio~:) — August 13, 2012 @ 6:36 PM

Hello Mr.Syed

I tested Squid + Nginx for caching, but I can't use it because every video I open gives me “an error occurred”, or the error shows up while the video is playing.

Did you solve it ????

Comment by Ahmed Morgan — August 13, 2012 @ 5:22 PM

Have you tried watching videos in 240p ?

Comment by Syed Jahanzaib / Pinochio~:) — August 13, 2012 @ 6:34 PM

thanks for new Solution but the method cache all web sites or not?

any videos will be cache like xnxx ,redtube ,tube8 ,facebook videos ?

and download files will be cache ?

thanks .

Comment by aa — August 13, 2012 @ 6:01 PM

This method is just an example for caching YouTube videos. You can also integrate it with Squid to cache other dynamic content.

Comment by Syed Jahanzaib / Pinochio~:) — August 13, 2012 @ 6:34 PM

Dear sir,

I followed all you above mentioned steps. Only 240p videos working fine. 360p giving alot pain means random errors like stuck at 39 second, error occurred, cache video play as black screen.

For 240p format working flawless. Kindly do something about 360p.

Thank you

Comment by Saqib — August 14, 2012 @ 7:41 AM

i will install and check thanks

Comment by Hussien — August 14, 2012 @ 7:41 AM

what about

cache_dir aufs /cache1 5000 16 256

i have 6 hdd every one 2TB

Comment by Hussien — August 14, 2012 @ 9:07 AM

Is this True ????

cache_dir

This is where you set the directories you will be using. You should have already mkreiserfs’d your cache directory partitions, so you’ll have an easy time deciding the values here. First, you will want to use about 60% or less of each cache directory for the web cache. If you use any more than that you will begin to see a slight degradation in performance. Remember that cache size is not as important as cache speed, since for maximum effectiveness your cache needs only store about a weeks worth of traffic. You’ll also need to define the number of directories and subdirectories. The formula for deciding that is this:

x=Size of cache dir in KB (i.e. 6GB=~6,000,000KB)

y=Average object size (just use 13KB)

z=Number of directories per first level directory

(((x / y) / 256) / 256) * 2 = # of directories

As an example, I use 6GB of each of my 13GB drives, so:

6,000,000 / 13 = 461538.5 / 256 = 1802.9 / 256 = 7 * 2 = 14

So my cache_dir line would look like this:

cache_dir 6000 14 256

Comment by Hussien — August 14, 2012 @ 9:19 AM

so mine would be :

#cache_dir aufs /1 1200000 2817 256

#cache_dir aufs /2 1200000 2817 256

#cache_dir aufs /3 1200000 2817 256

#cache_dir aufs /4 1200000 2817 256

#cache_dir aufs /5 1200000 2817 256

#cache_dir aufs /6 1200000 2817 256

but i got error and squid not start

FATAL: xcalloc: Unable to allocate 4112557923 blocks of 1 bytes!

Comment by Hussien — August 14, 2012 @ 9:20 AM

What squid version you have ?

Comment by Syed Jahanzaib / Pinochio~:) — August 17, 2012 @ 9:31 AM

I Have the exact same problem, it was working 100% with all 4 drives, but I made a boo boo and needed to reinstall the OS.

I Have 4 x 2 TB drives with each 1.7 TB available and 8 GB Ram. When I use only 3 drives, it works.

Squid conf:

cache_mem 6 GB

minimum_object_size 0 KB

maximum_object_size 128 MB

maximum_object_size_in_memory 128 KB

cache_swap_low 97

cache_swap_high 99

cache_dir aufs /cache1 1782211 38 256

cache_dir aufs /cache2 1782211 38 256

cache_dir aufs /cache3 1782211 38 256

cache_dir aufs /cache4 1782211 38 256

That will give you 1.6 TB allocated.

root@proxy:/# /usr/local/squid/sbin/squid -d1N -f /usr/local/squid/etc/squid.conf

Not currently OK to rewrite swap log.

storeDirWriteCleanLogs: Operation aborted.

Starting Squid Cache version 2.7.STABLE9 for x86_64-unknown-linux-gnu…

Process ID 4700

With 1048576 file descriptors available

Using epoll for the IO loop

Performing DNS Tests…

Successful DNS name lookup tests…

DNS Socket created at 0.0.0.0, port 32888, FD 6

Adding nameserver 127.0.0.1 from squid.conf

Adding nameserver **1.***.0.36 from squid.conf

Adding nameserver **1.***.0.37 from squid.conf

helperOpenServers: Starting 15 ‘storeurl.pl’ processes

logfileOpen: opening log /var/log/squid/access.log

Swap maxSize 7299936256 + 6291456 KB, estimated 562017516 objects

Target number of buckets: 28100875

Using 33554432 Store buckets

Max Mem size: 6291456 KB

Max Swap size: 7299936256 KB

FATAL: xcalloc: Unable to allocate 4109054858 blocks of 1 bytes!

Not currently OK to rewrite swap log.

storeDirWriteCleanLogs: Operation aborted.

Why can’t I use all 4 drives and what if I wanted to add drives?

Comment by A.J. Hart — November 17, 2013 @ 2:02 PM

sorry for many replies … i solved them

but about your way to cache youtube ..

root@CACHE:~# ls -lh /usr/local/www/nginx_cache/files

total 6.8M

-rw------- 1 www-data www-data 1.7M 2012-04-12 23:19 id=4a7d9b42ce959d72.itag=34

-rw------- 1 www-data www-data 1.7M 2012-04-14 23:08 id=bd651baa1f14e2fe.itag=34

-rw------- 1 www-data www-data 1.7M 2012-03-02 22:09 id=d32be70e6b010b70.itag=34

-rw------- 1 www-data www-data 1.7M 2012-04-17 17:41 id=e06969d12723e863.itag=34

i cant cache more than 1.7M for every video and then i got :

an error occured. please try again later.

Comment by Hussien — August 14, 2012 @ 10:43 AM

please tell me how you solved your cache_dir of 2TB?

Comment by operatorglobalnet — September 29, 2013 @ 2:31 PM

The ACL which is used to send YT requests to Nginx only allows the first segment of a video to be cached. 240p works because, when 240p is selected, the &range tag is dropped and the video is requested as a full file rather than as a range request.

Comment by Saqib — August 14, 2012 @ 1:47 PM

so how you cache 20M and 6M

Comment by Hussien — August 14, 2012 @ 4:13 PM

very nice, but needs some improvements even though i haven’t try the method as Pak Syed posting.

I hope there is some one or himself have spare time to make it better.

Comment by Ma'el — August 14, 2012 @ 5:11 PM

Sir kindly help my mikrotik cache hit queue is not giving more than 16mbps(2MB/sec). if configure browser to use proxy then it will getting 95-98 mbps on hit content.

name="Unlimited speed for cache hits" parent=global-out

packet-mark=cache-hits limit-at=0 queue=default priority=8

max-limit=0 burst-limit=0 burst-threshold=0 burst-time=0s

Comment by saqib — August 14, 2012 @ 9:12 PM

hello sir `nice working but only 1.7 mb yt file cache why not combine ur previous storeurl.pl script and that new also thanks for ur lusa dynamic youtube cache for squid thanks a lot please finish ur project why we are looking for use our also project u r great

Comment by rajeevsamal — August 14, 2012 @ 11:10 PM

Mangling on DSCP(TOS) based slows down cache hit content to deliver at 17mbps(2.2MB/sec). I have check it on both MT v3.3 & v5.18.

Is it a bug or Mikrotik limitation. Sir Jahanzaib and all blog members kindly take a look into this matter. Thanks

Comment by saqib — August 15, 2012 @ 12:49 AM

I haven’t checked it yet, I will see into it.

Comment by Syed Jahanzaib / Pinochio~:) — August 17, 2012 @ 9:29 AM

Hi Syed, please know this project, we are using and getting good results

http://sourceforge.net/projects/incomum/

Anything mail-me

Comment by int21 — August 15, 2012 @ 1:09 AM

Thanks for sharing. I will surely check it soon and if its good, I will write something about it too.

Comment by Syed Jahanzaib / Pinochio~:) — August 15, 2012 @ 8:08 AM

hello syed.

please can you tell me why when i change cache_dir pointed to another hdd the squid stop working

i mounted it and sqiud make swap but after that squid not work and it saying

root@CACHE:~# /etc/init.d/squid3 restart

* Restarting Squid HTTP Proxy 3.x squid3 [ OK ]

Comment by Hussien — August 15, 2012 @ 10:11 AM

I implemented you Incomum solution on my test box but same issue Incomum caching only first 51 seconds of 360p videos. 240p are working fine. Thanks for sharing.

Comment by Saqib — August 15, 2012 @ 8:54 PM

It’s not a perfect solution, but it works in some aspects, for example, if your client have windows XP/2003, The cache works perfectly, On Windows 7, only 240p Works great.

Comment by Syed Jahanzaib / Pinochio~:) — August 17, 2012 @ 9:24 AM

Your email address is bouncing mails.

Comment by Syed Jahanzaib / Pinochio~:) — August 17, 2012 @ 9:28 AM

Does Squid cache only YouTube and not other sites?

Youtube only 240 working

Comment by M.Tahir Shafiq — August 28, 2012 @ 7:16 PM

2012/08/15 08:43:47| Rebuilding storage in /c1 (DIRTY)

2012/08/15 08:44:36| Rebuilding storage in /c2 (DIRTY)

2012/08/15 08:45:24| Rebuilding storage in /c3 (DIRTY)

2012/08/15 08:46:12| Rebuilding storage in /c4 (DIRTY)

2012/08/15 08:47:00| Rebuilding storage in /c5 (DIRTY)

Comment by Hussien — August 15, 2012 @ 10:49 AM

help please

Comment by Hussien — August 15, 2012 @ 1:04 PM

Squid is rebuilding the storage after an unclean shutdown of Squid (a crash).

It means that the previous time you ran Squid you did not let it terminate in a clean manner, so Squid needs to verify the consistency of the cache a little harder while rebuilding its internal index of what is cached.

Comment by Syed Jahanzaib / Pinochio~:) — August 17, 2012 @ 9:27 AM

i try to uninstall then install it again and the same problem it didnt give dirty

Comment by Hussien — August 17, 2012 @ 8:14 PM

when i changed the hard disks it said CLEAN

why seagate hdd’s always DIRTY and western digital are CLEAN ???

i have more question .. can i cache iphone and android applications ??

thanks syed 🙂

Comment by Hussien — August 18, 2012 @ 9:49 PM

Try lowering the cache DIR size.

Start with a lower number, let's say 100 GB, and check. If it's OK, keep increasing it until it gives the error.

Also try changing aufs to ufs.

Comment by Syed Jahanzaib / Pinochio~:) — August 18, 2012 @ 10:04 PM

Nice sharing !

Comment by faizan — August 15, 2012 @ 3:02 PM

Thank you for sharing it,

I tried it but like other people reported. It caches for very few seconds then stops and video then becomes unplayable.

It would be great of there is a fix for it.

Comment by Badr — August 17, 2012 @ 11:27 AM

me too I interpreted the problem I run

and we need configure its networking components

Comment by abdallah — August 18, 2012 @ 4:06 PM

Sir, please tell me how I can remove the old Ubuntu settings; the directory is causing a problem.

Comment by arsalan malick — August 18, 2012 @ 3:01 AM

Sir,

if YouTube is being cached, why does the downloaded video show the server address of PTCL (the ISP), i.e.

http://o-o---preferred---par03g08---v4---nonxt5.c.youtube.com/

Shouldn't it show the address of the cache proxy server?

Comment by Kashif Lari — August 18, 2012 @ 2:45 PM

salam,

this is rehmat ali gulwating have a request that i follow these steps but squid cant start error is 2012/08/16 01:32:09| WARNING: url_rewriter #5 (FD 10) exited

2012/08/16 01:32:09| WARNING: url_rewriter #4 (FD 9) exited

2012/08/16 01:32:09| WARNING: url_rewriter #3 (FD 8) exited

2012/08/16 01:32:09| Too few url_rewriter processes are running)

when i remove this lines from squid.conf

#url_rewrite_program /etc/nginx/nginx.rb

#url_rewrite_host_header off

squid starts all work fine but youtube cannot cache becoz of these lines

when i adding these lines

#url_rewrite_program /etc/nginx/nginx.rb

#url_rewrite_host_header off

squid giving error

2012/08/16 01:32:09| WARNING: url_rewriter #5 (FD 10) exited

2012/08/16 01:32:09| WARNING: url_rewriter #4 (FD 9) exited

2012/08/16 01:32:09| WARNING: url_rewriter #3 (FD 8) exited

2012/08/16 01:32:09| Too few url_rewriter processes are running)

please give me any guide to resolve this

thanking you,

Comment by rehmat ali — August 19, 2012 @ 1:42 AM

one more thing i have to inform you that i m using lusca/squid with nginx on centos and what is user www-data; in nginx.conf becoz i have no user created, except root.

i changed www-data; into user squid;

still same issue please resolved this as soon as possible.

thanking you,

Comment by rehmat ali — August 19, 2012 @ 7:21 AM

nginx error.log file error

2012/08/16 07:46:04 [error] 8286#0: *34 open() "/usr/share/nginx/html/squid-internal-periodic/store_digest" failed (2: No such file or directory), client: 127.0.0.1, server: localhost, request: "GET /squid-internal-periodic/store_digest HTTP/1.0", host: "127.0.0.1"

Comment by rehmat ali — August 19, 2012 @ 7:51 AM

2012/08/16 08:03:30 [crit] 8538#0: *12 rename() "/home/www/nginx_cache/tmp/0000000005" to "/home/www/nginx_cache/files/id=09b3a2c99e648c4f.itag=34" failed (13: Permission denied) while reading upstream, client: 127.0.0.1, server: , request: "GET http://o-o---preferred---ptcl-khi1---v9---lscache8.c.youtube.com/videoplayback?algorithm=throttle

Comment by rehmat ali — August 19, 2012 @ 8:05 AM

actully in my squid.conf my user and group is squid should i change all typed (proxy) into (squid) in nginx.conf file ?

thanks

Comment by rehmat ali — August 19, 2012 @ 8:08 AM

If you are using Ubuntu, the user will be proxy.

On anything other than Ubuntu, such as Fedora, stick with the squid user.

Comment by Syed Jahanzaib / Pinochio~:) — August 20, 2012 @ 3:59 PM

I tried it but like other people reported. It caches for very few seconds then stops and video then becomes unplayable.

It would be great of there is a fix for it.

and we need configure its networking components

Comment by abdallah — August 20, 2012 @ 4:07 PM

wait . . .

Comment by Syed Jahanzaib / Pinochio~:) — August 22, 2012 @ 9:33 AM

I tried it but like other people reported. It caches for very few seconds then stops and video then becomes unplayable.

It would be great of there is a fix for it.

and we need configure its networking component

Comment by abdallah — August 20, 2012 @ 4:23 PM

salam

finally i found some errors when starting squid on centos with nginx method seems that ruby cant access by squid i tried and change permission of /usr/bin/env but nothing happend plzzz check access.log and correct my conf.thx

2012/08/19 09:34:35| Starting Squid Cache version LUSCA_HEAD-r14809 for i686-pc-linux-gnu…

2012/08/19 09:34:35| Process ID 9430

2012/08/19 09:34:35| NOTICE: Could not increase the number of filedescriptors

2012/08/19 09:34:35| With 1024 file descriptors available

2012/08/19 09:34:35| Using poll for the IO loop

2012/08/19 09:34:35| Performing DNS Tests…

2012/08/19 09:34:35| Successful DNS name lookup tests…

2012/08/19 09:34:35| Adding nameserver 10.0.5.1 from squid.conf

2012/08/19 09:34:35| DNS Socket created at 0.0.0.0, port 40182, FD 5

2012/08/19 09:34:35| Adding nameserver 203.99.163.240 from squid.conf

2012/08/19 09:34:35| helperOpenServers: Starting 5 ‘nginx.rb’ processes

/usr/bin/env: ruby1.8: Permission denied

/usr/bin/env: ruby1.8: Permission denied

/usr/bin/env: ruby1.8: Permission denied

/usr/bin/env: ruby1.8: Permission denied

/usr/bin/env: ruby1.8: Permission denied

2012/08/19 09:34:35| logfileOpen: opening log /var/log/squid/access.log

2012/08/19 09:34:35| logfileOpen: opening log /var/log/squid/access1.log

2012/08/19 09:34:35| logfileOpen: opening log /var/log/squid/access2.log

2012/08/19 09:34:35| logfileOpen: opening log /var/log/squid/access3.log

2012/08/19 09:34:35| logfileOpen: opening log /var/log/squid/access4.log

2012/08/19 09:34:35| Unlinkd pipe opened on FD 19

2012/08/19 09:34:35| Swap maxSize 1024000000 + 2097152 KB, estimated 78930550 objects

2012/08/19 09:34:35| Target number of buckets: 3946527

2012/08/19 09:34:35| Using 4194304 Store buckets

2012/08/19 09:34:35| Max Mem size: 2097152 KB

2012/08/19 09:34:35| Max Swap size: 1024000000 KB

2012/08/19 09:34:35| Local cache digest enabled; rebuild/rewrite every 3600/3600 sec

2012/08/19 09:34:35| logfileOpen: opening log /var/log/squid/store4.log

2012/08/19 09:34:35| AUFS: /home/cache1: log ‘/home/cache1/swap.state’ opened on FD 21

2012/08/19 09:34:35| AUFS: /home/cache1: tmp log /home/cache1/swap.state.new opened on FD 21

2012/08/19 09:34:35| Rebuilding storage in /home/cache1 (DIRTY)

2012/08/19 09:34:35| Using Least Load store dir selection

2012/08/19 09:34:35| Current Directory is /root

2012/08/19 09:34:36| ufs_rebuild: /home/cache1: rebuild type: REBUILD_DISK

2012/08/19 09:34:36| ufs_rebuild: /home/cache1: beginning rebuild from directory

2012/08/19 09:34:35| Loaded Icons.

2012/08/19 09:34:36| Accepting transparently proxied HTTP connections at 0.0.0.0, port 8080, FD 23.

2012/08/19 09:34:36| Configuring 127.0.0.1 Parent 127.0.0.1/8081/0

2012/08/19 09:34:36| Ready to serve requests.

2012/08/19 09:34:36| WARNING: url_rewriter #5 (FD 10) exited

2012/08/19 09:34:36| WARNING: url_rewriter #4 (FD 9) exited

2012/08/19 09:34:36| WARNING: url_rewriter #3 (FD 8) exited

2012/08/19 09:34:36| Too few url_rewriter processes are running

FATAL: The url_rewriter helpers are crashing too rapidly, need help!

Squid Cache (Version LUSCA_HEAD-r14809): Terminated abnormally.

CPU Usage: 0.041 seconds = 0.020 user + 0.021 sys

Maximum Resident Size: 21360 KB

Page faults with physical i/o: 0

Memory usage for squid via mallinfo():

total space in arena: 2412 KB

Ordinary blocks: 2352 KB 6 blks

Small blocks: 0 KB 0 blks

Holding blocks: 65208 KB 3 blks

Free Small blocks: 0 KB

Free Ordinary blocks: 59 KB

Total in use: 67560 KB 100%

Total free: 59 KB 0%

Comment by rehmat ali — August 22, 2012 @ 9:38 AM

OR paste a script 4 centos 4 for squid.conf, nginx.conf, nginx.rb…….thanks 4 ur reply

Comment by Rehmat ali — August 22, 2012 @ 11:59 AM

thanks mr.

I tried it but like other people reported. It caches for very few seconds then stops and video then becomes unplayable.

It would be great if there is a fix for it.

and we need configure its networking component

Comment by abdallah — August 23, 2012 @ 4:20 PM

Updated: 24th August, 2012: Caching Working OK. Check with the new config.

Comment by Syed Jahanzaib / Pinochio~:) — August 24, 2012 @ 1:08 PM

[…] https://aacable.wordpress.com/2012/08/13/youtube-caching-with-squid-nginx/ […]

Pingback by Howto Cache Youtube with SQUID / LUSCA and bypass Cached Videos from Mikrotik Queue « Syed Jahanzaib Personnel Blog to Share Knowledge ! — August 24, 2012 @ 1:31 PM

[…] Youtube caching with Squid + Nginx […]

Pingback by Youtube Caching Problem : An error occured. Please try again later. [SOLVED] updated storeurl.pl « Syed Jahanzaib Personnel Blog to Share Knowledge ! — August 24, 2012 @ 1:32 PM

Awesome,

I tried it on Windows 7 and Chrome, then loaded the video with internet explorer 8 and it read it from cache without any problem.

Thanks,

Comment by Badr — August 24, 2012 @ 7:09 PM

Good to know.

Comment by Syed Jahanzaib / Pinochio~:) — August 24, 2012 @ 9:25 PM

Thank you very much. My proxy work fine.

Comment by Maz Bhenks — August 24, 2012 @ 7:59 PM

Thanks Mr Syed Jahanzaib

I followed all the steps, but in the end Squid doesn’t work and I get this error:

WARNING: url_rewriter #5 (FD 10) exited

WARNING: url_rewriter #4 (FD 9) exited

WARNING: url_rewriter #3 (FD 8) exited

Too few url_rewriter processes are running

FATAL: The url_rewriter helpers are crashing too rapidly, need help!

But when I comment out these lines in squid.conf:

#url_rewrite_program /etc/nginx/nginx.rb

#url_rewrite_host_header off

it works, but it doesn’t cache YouTube. Please help me solve this. Thanks.

Comment by Squidy — August 25, 2012 @ 1:15 PM

Check your permissions.

chmod 777 -R /squidcache

touch /etc/nginx/nginx.rb

chmod 755 /etc/nginx/nginx.rb

nano /etc/nginx/nginx.rb

chown www-data /usr/local/www/nginx_cache/files/ -Rf

chown proxy:proxy /squidcache

Comment by Badr — August 25, 2012 @ 1:43 PM

Also, if you have SELinux enabled, try disabling it.

Thanx

Comment by Badr — August 25, 2012 @ 1:47 PM

The most common cause of “FATAL: The url_rewriter helpers are crashing too rapidly, need help!” is a copy-paste error in nginx.rb. This sometimes happens when code is pasted from a WordPress blog. I will test it on Monday and let you know. I will also post the script on pastebin; send me your email address and I will send you the raw code.

Comment by Syed Jahanzaib / Pinochio~:) — August 25, 2012 @ 4:30 PM

Thanks to all for the help, Mr Badr and Mr Syed Jahanzaib. I removed Ubuntu and reinstalled it, and now it works well with no errors 🙂

Comment by SquidY — August 25, 2012 @ 10:07 PM

Great. Can you share your experience with some screenshots.

Comment by Syed Jahanzaib / Pinochio~:) — August 26, 2012 @ 10:01 AM

If I don’t use dnsmasq, how do I configure dns_nameservers and the resolver in nginx.conf? In your config you use 221.132.112.8.

my proxy IP 192.168.0.1

jazaakallaahu khayra..

Comment by Mahdiy — August 27, 2012 @ 4:42 AM

one more question,

I have Lusca installed on my proxy. Do I need to uninstall it?

Comment by Mahdiy — August 27, 2012 @ 7:07 AM

Lusca can work with NGINX too. Its configuration is almost the same as Squid’s.

Comment by Syed Jahanzaib / Pinochio~:) — August 27, 2012 @ 9:11 AM

Use any DNS server IP; it is not really an issue.

Comment by Syed Jahanzaib / Pinochio~:) — August 27, 2012 @ 9:12 AM

chown www-data /usr/local/www/nginx_cache/files/ -Rf

What is www-data? Is it a user? I have CentOS with Lusca and I never created a user named www-data, so should I change www-data to squid or nginx? Please clarify. My e-mail is big.bang.now@gmail.com; please send me the raw nginx.rb code, as that may resolve the problem.

Comment by Rehmat ali — August 28, 2012 @ 7:38 AM

In Ubuntu, www-data is a user/group created specifically for web servers. It should be listed in /etc/passwd as a user, and the web server can be configured to run as another user in /etc/apache2/apache2.conf.

Basically, it’s just a user with stripped permissions so if someone managed to find a security hole in one of your web applications they wouldn’t be able to do much. Without a lower-user like www-data set, apache2 would run as root, which would be a Bad Thing, since it would be able to do anything and everything to your system.

So on CentOS you may use apache.

Comment by Syed Jahanzaib / Pinochio~:) — August 28, 2012 @ 9:19 AM

Do you know why it doesn’t work for 360p or 480p?

Comment by nav — August 28, 2012 @ 1:28 PM

I have tested it and it is caching 360p perfectly (which is the default video quality for the YouTube player).

Comment by Syed Jahanzaib / Pinochio~:) — August 29, 2012 @ 12:42 PM

Do you know if it will work with an iPad? I don’t think it uses range queries.

Comment by nav — August 30, 2012 @ 9:27 AM

When starting Squid I get the error below. It looks like Ruby has a permission problem. I tried changing the owner of /usr/bin/env to squid, nginx and apache, but the issue remains. Please help me resolve this. I am using CentOS with Lusca. Thanks for your reply.

2012/08/25 16:00:03| helperOpenServers: Starting 5 ‘nginx.rb’ processes

/usr/bin/env: ruby1.8: Permission denied

/usr/bin/env: ruby1.8: Permission denied

/usr/bin/env: ruby1.8: Permission denied

/usr/bin/env: ruby1.8: Permission denied

Comment by rehmat ali — August 28, 2012 @ 4:15 PM

Dear Jahanzaib bhai, I get this error when I try to enable the url rewriter program.

I am using CentOS 5.5. Kindly advise how I can resolve this issue.

2012/08/29 10:44:10| Ready to serve requests.

2012/08/29 10:44:10| WARNING: url_rewriter #1 (FD 6) exited

2012/08/29 10:44:10| WARNING: url_rewriter #2 (FD 8) exited

2012/08/29 10:44:10| WARNING: url_rewriter #3 (FD 9) exited

2012/08/29 10:44:10| Too few url_rewriter processes are running

FATAL: The url_rewriter helpers are crashing too rapidly, need help!

Comment by owais — August 29, 2012 @ 10:58 AM

Dear Jahanzaib bhai, I get this error when I try to enable the url rewriter program.

I am using CentOS 6 and Fedora 14 with Squid 3.1 and LUSCA. Kindly advise how I can resolve this issue.

2012/08/29 10:44:10| Ready to serve requests.

2012/08/29 10:44:10| WARNING: url_rewriter #1 (FD 6) exited

2012/08/29 10:44:10| WARNING: url_rewriter #2 (FD 8) exited

2012/08/29 10:44:10| WARNING: url_rewriter #3 (FD 9) exited

2012/08/29 10:44:10| Too few url_rewriter processes are running

FATAL: The url_rewriter helpers are crashing too rapidly, need help!

Comment by owais — August 29, 2012 @ 10:59 AM

Working well for me, but cached content is not delivered at full LAN speed through Mikrotik.

Comment by rajeevsamal — August 29, 2012 @ 6:48 PM

videocacheview … check out this tool on google … you don’t need for all of that. 🙂 happy downloading

Comment by Anonymous — August 30, 2012 @ 3:54 AM

videocacheview is a client side tool only.

Comment by Syed Jahanzaib / Pinochio~:) — August 30, 2012 @ 9:07 AM

Can someone please tell me how to install Squid 2.7? It keeps installing squid3.

I tried apt-get install squid=2.7

Comment by nav — August 31, 2012 @ 7:25 AM

apt-get install squid

That installs Squid 2.7.

How do I mangle the hits from NGINX in Mikrotik for ZPH?

Does anyone know?

Comment by xbyt — August 31, 2012 @ 8:31 AM

apt-get install squid is installing squid3

Comment by nav — August 31, 2012 @ 10:50 AM

I have installed squid 2.7 from source as apt-get is installing squid3

However I get the following too

2012/08/31 16:28:17| WARNING: url_rewriter #1 (FD 7) exited

2012/08/31 16:28:17| WARNING: url_rewriter #3 (FD 9) exited

2012/08/31 16:28:17| WARNING: url_rewriter #2 (FD 8) exited

2012/08/31 16:28:17| Too few url_rewriter processes are running

2012/08/31 16:28:17| ALERT: setgid: (1) Operation not permitted

FATAL: The url_rewriter helpers are crashing too rapidly, need help!

Comment by nav — August 31, 2012 @ 11:31 AM

I am on Ubuntu

Comment by nav — August 31, 2012 @ 11:32 AM

execute:

sed -i "/^#/d;/^ *$/d" /etc/squid/squid.conf

and post your squid.conf here.

Comment by xbyt — August 31, 2012 @ 8:38 PM

Sorry, I had installed Ubuntu 12. After installing Ubuntu 10 it is all working fine.

Comment by nav — September 2, 2012 @ 5:36 AM

It can work with Ubuntu 12 too, but if it worked on 10 for you, I am glad it worked 🙂

Comment by Syed Jahanzaib / Pinochio~:) — September 3, 2012 @ 9:01 AM

We’re having problems with some computers on the network that are not going through the proxy server. What could be the cause?

Comment by lucasffernandes — August 31, 2012 @ 10:53 PM

Sorry, I was installing Ubuntu 12. With Ubuntu 10.04 everything worked fine, however I cannot access any website.

cache.log says

/etc/nginx/nginx.rb: 10: require: not found

/etc/nginx/nginx.rb: 11: require: not found

/etc/nginx/nginx.rb: 13: class: not found

/etc/nginx/nginx.rb: 14: attr_accessor: not found

/etc/nginx/nginx.rb: 15: attr_reader: not found

/etc/nginx/nginx.rb: 17: Syntax error: "(" unexpected

/etc/nginx/nginx.rb: 10: require: not found

/etc/nginx/nginx.rb: 11: require: not found

/etc/nginx/nginx.rb: 13: class: not found

/etc/nginx/nginx.rb: 14: attr_accessor: not found

/etc/nginx/nginx.rb: 15: attr_reader: not found

.

.

.

2012/09/01 17:40:13| Configuring 127.0.0.1 Parent 127.0.0.1/8081/0

2012/09/01 17:40:13| Ready to serve requests.

2012/09/01 17:40:13| WARNING: url_rewriter #1 (FD 7) exited

2012/09/01 17:40:13| WARNING: url_rewriter #2 (FD 8) exited

2012/09/01 17:40:13| WARNING: url_rewriter #3 (FD 9) exited

2012/09/01 17:40:13| Too few url_rewriter processes are running

FATAL: The url_rewriter helpers are crashing too rapidly, need help!

Squid Cache (Version 2.7.STABLE7): Terminated abnormally.

Comment by nav — September 1, 2012 @ 12:49 PM

Sorry, it is working now. There was a blank line at the top of the nginx.rb file. Oops.

Comment by nav — September 1, 2012 @ 12:59 PM
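Several of the url_rewriter crashes reported in this thread come down to line 1 of nginx.rb: a blank line above the #! line, an interpreter name such as ruby1.8 that does not exist on the system, or a missing execute bit. A minimal Python sketch of a pre-flight check follows. The function name check_helper is hypothetical and is not part of Squid or nginx; it only inspects the file and does not run it.

```python
import os
import shutil


def check_helper(path):
    """Return a list of likely reasons a helper script fails to start."""
    problems = []
    with open(path, "rb") as f:
        first = f.readline().decode("utf-8", errors="replace").strip()
    if not first.startswith("#!"):
        # Without a #! on line 1 the file may be handed to the shell instead of Ruby.
        problems.append("line 1 is not a #! line, so the shell may try to run the file")
    else:
        parts = first[2:].split()
        if not parts:
            problems.append("the #! line names no interpreter")
        elif os.path.basename(parts[0]) == "env" and len(parts) > 1:
            # "#!/usr/bin/env ruby1.8": the real interpreter is env's argument.
            if shutil.which(parts[1]) is None:
                problems.append("interpreter %r is not on PATH" % parts[1])
        elif not os.path.exists(parts[0]):
            problems.append("interpreter %r does not exist" % parts[0])
    if not os.access(path, os.X_OK):
        problems.append("file is not executable by the current user")
    return problems
```

Calling check_helper("/etc/nginx/nginx.rb") as the user Squid runs as should return an empty list when none of these three problems is present; it says nothing about errors further down in the script.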

Assalam o alaikum. Sir, how do I connect Ubuntu to Mikrotik? Please tell me soon. Also, sir, I have 50 clients and browsing is very slow.

Comment by Arsalan Malick — September 1, 2012 @ 4:34 PM

Salam

I have two question please :

1- Can we use multiple HDDs for proxy_store?

2- Would the cached files be deleted by Squid’s policy?

Comment by sadook — September 2, 2012 @ 9:31 AM

1- No, but you can create a RAID0 array to use the space of both hard drives.

There is a very nice article regarding this at

https://help.ubuntu.com/community/Installation/SoftwareRAID

2- No, the nginx cache will not be removed by Squid’s policy. A workaround is to create a bash script that removes objects that have not been accessed in xxx days. For example, if an object has not been accessed by any user or by the web server for one month, it may be deleted.

Comment by Syed Jahanzaib / Pinochio~:) — September 3, 2012 @ 8:57 AM
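The age-based cleanup described in the reply above could look roughly like the Python sketch below. It is only an illustration under assumptions: the function name purge_stale and the 30-day default are hypothetical, the cache directory is the one used elsewhere on this page, and access times are only meaningful if the filesystem is not mounted with noatime.

```python
import os
import time


def purge_stale(cache_dir, max_age_days=30, now=None, dry_run=True):
    """Delete regular files in cache_dir not accessed for max_age_days.

    Returns the sorted names of the files that were (or would be) removed.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    removed = []
    for entry in os.scandir(cache_dir):
        if not entry.is_file(follow_symlinks=False):
            continue
        # st_atime is the last access time recorded by the filesystem.
        if entry.stat(follow_symlinks=False).st_atime < cutoff:
            if not dry_run:
                os.remove(entry.path)
            removed.append(entry.name)
    return sorted(removed)
```

Running purge_stale("/usr/local/www/nginx_cache/files") with the default dry_run=True only lists candidates; passing dry_run=False from a nightly cron job would actually delete them.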

Salam

is it possible to use more than 1 HDD for caching video with Nginx ?

Comment by sadook — September 3, 2012 @ 8:14 AM

I guess not.

However, if your hardware supports it, configure your hard disks in RAID0. This way you can combine two hard disks to function as one. There is a very nice article regarding this at

https://help.ubuntu.com/community/Installation/SoftwareRAID

Comment by Syed Jahanzaib / Pinochio~:) — September 3, 2012 @ 8:40 AM

I have found that when an iPad is used to access YouTube, NGINX does not cache. In the log the return code is 206 and not 200 as when viewing from a browser. Is there a workaround for that?

Comment by nav — September 4, 2012 @ 3:18 PM
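To check an observation like the 206-versus-200 one above, it can help to tally response codes per client type from the nginx access log. The Python sketch below assumes the log uses nginx's standard "combined" format; if a custom log_format is configured, the pattern would need adjusting. The name status_by_agent is hypothetical.

```python
import re
from collections import Counter

# "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
LINE_RE = re.compile(
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def status_by_agent(lines):
    """Count (user agent, HTTP status) pairs in combined-format log lines."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.search(line)
        if m:
            counts[(m.group("agent"), m.group("status"))] += 1
    return counts
```

Feeding it the open log file, for example status_by_agent(open("/var/log/nginx/access.log")) (path assumed), shows which user agents receive 206 Partial Content responses and which receive plain 200s.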

Assalaamu Alaikum Syed Jahanzaib bhai, I have set up the YouTube cache server project you posted and it is working; may Allah keep you happy and safe. But there is one problem: when I use it as a client, I have to set the proxy in Internet Explorer, entering the address and then the port. I want auto-detect to work so that I don’t have to configure the proxy in Internet Explorer. Please tell me how to do this.

Comment by abdulsami — September 5, 2012 @ 4:00 AM

You have to configure your proxy in transparent mode. Also, by using iptables, you can automatically redirect user requests to Squid port 8080.

Use the guide.

Comment by Syed Jahanzaib / Pinochio~:) — September 5, 2012 @ 11:09 AM

salam,

Rehmat here. Jahanzaib sir, please post a script for YouTube caching with Squid and nginx for CentOS, because I tried your Ubuntu script and Squid cannot start. It gives me this error:

2012/08/31 16:28:17| WARNING: url_rewriter #1 (FD 7) exited

2012/08/31 16:28:17| WARNING: url_rewriter #3 (FD 9) exited

2012/08/31 16:28:17| WARNING: url_rewriter #2 (FD 8) exited

2012/08/31 16:28:17| Too few url_rewriter processes are running

2012/08/31 16:28:17| ALERT: setgid: (1) Operation not permitted

FATAL: The url_rewriter helpers are crashing too rapidly,

Please post a script for CentOS.

Comment by Rehmat ali — September 6, 2012 @ 6:13 AM

Is there a way to get windows update with this script ??

Best regards.

Comment by int21 — September 6, 2012 @ 7:08 PM

Dear Syed,

I got it working like the tutorial, but can you advise how to use the ZPH feature for youtube video hits? It seems the video which are HIT are not recognized by squid and therefore shows TCP_MISS in the access log. As a result, the ZPH feature won’t work. I believe the ruby scripts needs some improvement which will be able to tell the squid that a particular video is a hit or not. And hence squid can mark the TOS while delivering to the client.

Any suggestion are welcomed.

Regards,

Saiful

Comment by Saiful Alam — September 7, 2012 @ 7:51 PM

I guess ZPH will not work with this approach, as Squid is not delivering the content; nginx is. I will do some research on it once I get some free time.

Comment by Syed Jahanzaib / Pinochio~:) — September 9, 2012 @ 9:38 PM

thanks, and that’s working, … waiting good news for zph problems 🙂

Comment by NGERI — September 13, 2012 @ 8:08 AM

Restarting nginx: [emerg]: bind() to 0.0.0.0:80 failed (98: Address already in use)

[emerg]: bind() to 0.0.0.0:80 failed (98: Address already in use)

[emerg]: bind() to 0.0.0.0:80 failed (98: Address already in use)

[emerg]: bind() to 0.0.0.0:80 failed (98: Address already in use)

[emerg]: bind() to 0.0.0.0:80 failed (98: Address already in use)

[emerg]: still could not bind()

nginx.

Comment by hendri harmoko — September 9, 2012 @ 9:33 PM

It shows that you already have a web server installed, e.g. Apache.

Check for it and remove it before configuring nginx.

Or you can configure nginx to use a port other than 80.

Comment by Syed Jahanzaib / Pinochio~:) — September 9, 2012 @ 9:42 PM

hi help

Comment by Arman Ahmed — April 22, 2016 @ 11:33 PM

dear janzaib

I have to inform you that I use the storeurl.pl pattern to cache YouTube and it only caches 51 seconds of a video segment. But after setting quick_abort_min to 1 MB and range_offset_limit to 10 MB, when I download any YouTube video once with IDM and download it again from another PC with IDM, the full video is served from cache. So I think the storeurl.pl script is missing something needed to serve the full cached video in the Flash player. If I am wrong, please correct me. Thanks.

Comment by Rehmat ali — September 11, 2012 @ 1:42 AM

First of all, YouTube caching is working perfectly (as a standalone server). I have an issue: when I attach this server to an MT HotSpot server, clients are served its cached content. But when I attach it to an MT PPPoE server with the same three NAT, mangle and queue rules, the server cannot even be pinged and browsing stops on the clients. Kindly guide me, and if any changes are required in MT or Squid (rules to be added), please share those rules here and tell me where to add them. Thanks a lot.

Comment by Muhammad Abdullah Butt — September 11, 2012 @ 9:28 AM

Muhammad Abdullah Butt, are you following the nginx pattern for YouTube caching?

Comment by rehmat ali — September 11, 2012 @ 6:37 PM

I followed your tutorial. I have a Squid box caching every file extension, and now I have added your code.

The video plays normally but is not served from cache, because it is still fetched from YouTube and not from my cache.

But look here please:

root@LOULOU-CACHE:~# ls -lh /usr/local/www/nginx_cache/files
total 10M
-rw------- 1 www-data www-data 1.7M 2011-06-23 12:33 id=65a39536a03b9410.itag=34.range=13-1781759.algo=throttle-factor
-rw------- 1 www-data www-data 1.7M 2011-06-23 12:33 id=65a39536a03b9410.itag=34.range=1781760-3563519.algo=throttle-factor
-rw------- 1 www-data www-data 1.4M 2011-06-23 12:33 id=65a39536a03b9410.itag=34.range=3563520-5345279.algo=throttle-factor
-rw------- 1 www-data www-data 1.7M 2009-12-22 04:28 id=d480e0e187974607.itag=34.range=13-1781759.algo=throttle-factor
-rw------- 1 www-data www-data 1.7M 2009-12-22 04:28 id=d480e0e187974607.itag=34.range=1781760-3563519.algo=throttle-factor
-rw------- 1 www-data www-data 1.7M 2009-12-22 04:28 id=d480e0e187974607.itag=34.range=3563520-5345279.algo=throttle-factor
-rw------- 1 www-data www-data 135K 2009-12-22 04:28 id=d480e0e187974607.itag=34.range=5345280-7127039.algo=throttle-factor

So what is my problem? Please help, brother, and thank you a lot.

Comment by Hussien — September 20, 2012 @ 6:28 AM
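The file names in a listing like the one above encode the video id, the itag (quality) and the byte range of each chunk, so a quick way to see what is in the cache is to group the chunks per video. The Python sketch below infers the name pattern from the listing only; it is an assumption about how nginx.rb names files, and summarize_chunks is a hypothetical helper.

```python
import re
from collections import defaultdict

# Pattern inferred from names such as
# id=d480e0e187974607.itag=34.range=13-1781759.algo=throttle-factor
NAME_RE = re.compile(
    r"^id=(?P<id>[^.]+)\.itag=(?P<itag>\d+)"
    r"\.range=(?P<start>\d+)-(?P<end>\d+)\.algo=(?P<algo>.+)$"
)


def summarize_chunks(filenames):
    """Group cached chunk names by (video id, itag) and check range continuity."""
    ranges = defaultdict(list)
    for name in filenames:
        m = NAME_RE.match(name)
        if m:
            key = (m.group("id"), int(m.group("itag")))
            ranges[key].append((int(m.group("start")), int(m.group("end"))))
    summary = {}
    for key, spans in ranges.items():
        spans.sort()
        # Contiguous means each chunk starts one byte after the previous one ends.
        contiguous = all(b[0] == a[1] + 1 for a, b in zip(spans, spans[1:]))
        covered = sum(end - start + 1 for start, end in spans)
        summary[key] = {
            "chunks": len(spans),
            "bytes_covered": covered,
            "contiguous": contiguous,
        }
    return summary
```

Passing os.listdir("/usr/local/www/nginx_cache/files") to summarize_chunks gives one entry per video and quality. Note that bytes_covered is the range named in the file names, not the on-disk size, which can be smaller for the last chunk.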

Hello, please reply?

Comment by Hussien — September 21, 2012 @ 3:34 AM

It works fine, thank you. How can we cache other video sites?

Comment by Hussien — September 21, 2012 @ 11:12 PM

I have a big problem with watching YouTube videos, not with caching them. I don’t want to cache for now; only watching is a problem.

I tried 3 different Squid servers with the same issue: YouTube plays the first segment only, then keeps waiting forever!

Without Squid there is no problem at all. I know YouTube divides files into segments, but how do you guys play YouTube videos normally using Squid?

Forget caching, I just need to play the whole video file directly from YouTube.

Comment by samsoft — September 22, 2012 @ 6:24 AM

Salam Syed bhai, how are you? Syed bhai, there is a problem with Squid that other friends are facing too: if we enable IDM caching in Squid then video is not cached, and if we enable video caching then IDM downloads are not cached; only one thing is cached at a time. Kindly tell us the solution. Second, with Squid, clients cannot log in to Facebook from Mozilla; any ID that tries to log in gets a “server not found” error, but Squid works fine with IE. Mozilla does not open some sites. Kindly give a solution to these two queries. Thanks.

Comment by Fahad — September 24, 2012 @ 11:31 PM

I would not recommend IDM caching. I am sure you are using the range_offset_limit directive in Squid; if yes, then stop using it, as it can have a negative impact through unnecessary WAN link utilization.

Look at https://aacable.wordpress.com/2012/08/24/squid-dont-cache-idm-downloads/

Comment by Syed Jahanzaib / Pinochio~:) — September 29, 2012 @ 4:24 PM

And what about the Facebook “server not found” problem in Mozilla? I think FB is not using port 8080? Any solution, please?

Comment by Fahad — October 6, 2012 @ 11:32 PM

What is your network scenario and the problem you are facing?

Comment by Syed Jahanzaib / Pinochio~:) — October 7, 2012 @ 2:36 PM

Some additional info. Using squid 3 on Ubuntu 12.04, I had to make the following changes to your squid.conf to get it to work (bits snipped out of the diff; - marks removed lines, + marks added lines):

# This directive was removed

-server_http11 on

# The log location is now /var/log/squid3

-cache_access_log /var/log/squid/access.log

-cache_log /var/log/squid/cache.log

-cache_store_log /var/log/squid/store.log

+cache_access_log /var/log/squid3/access.log

+cache_log /var/log/squid3/cache.log

+cache_store_log /var/log/squid3/store.log

# This is no longer supported

-acl all src 0.0.0.0/0.0.0.0

# This fixes a warning that suggests using CIDR notation

-acl localhost src 127.0.0.1/255.255.255.255

+acl localhost src 127.0.0.1/32

# This is no longer supported

-broken_vary_encoding allow apache

I still don’t have this 100% working, but it’s serving content.

Comment by Ted Mielczarek (@TedMielczarek) — October 3, 2012 @ 7:48 PM
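The squid 3 changes listed in the comment above are mechanical enough to apply with a small script. The Python sketch below covers only the edits named in that diff (dropping server_http11, broken_vary_encoding and the old acl all line, moving the log paths to /var/log/squid3, and switching the localhost ACL to CIDR notation). The function name adapt_for_squid3 is hypothetical, and other directives may need attention on other Squid versions.

```python
# Directives the comment reports as removed or unsupported in squid 3.
REMOVED_DIRECTIVES = ("server_http11", "broken_vary_encoding")


def adapt_for_squid3(conf_text):
    """Apply the squid.conf edits from the diff above and return the new text."""
    out = []
    for line in conf_text.splitlines():
        tokens = line.split()
        if tokens and tokens[0] in REMOVED_DIRECTIVES:
            continue
        # "acl all src 0.0.0.0/0.0.0.0" is reported as no longer supported.
        if tokens == ["acl", "all", "src", "0.0.0.0/0.0.0.0"]:
            continue
        # Log files live under /var/log/squid3 on this setup.
        line = line.replace("/var/log/squid/", "/var/log/squid3/")
        # Netmask form replaced by CIDR notation to silence the warning.
        line = line.replace("127.0.0.1/255.255.255.255", "127.0.0.1/32")
        out.append(line)
    return "\n".join(out) + "\n"
```

It works on the text of the file, so the result can be written to a new file and compared against the original before replacing anything.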

Thank you for sharing your experience and updated information.

Comment by Syed Jahanzaib / Pinochio~:) — October 5, 2012 @ 8:19 AM

Hello friends. I have Ubuntu with squid3. My question: do I only need to modify those lines in squid.conf, or do I also have to change something in nginx.rb and nginx.conf? It really isn’t working for me: the services start fine but videos are not cached, and YouTube videos do not load. If I load a video that is not from YouTube it does load, but it is not cached.

Comment by jhon freddy caldas — January 11, 2013 @ 2:41 AM

Hello, please, I need help: how can I exempt YouTube videos from the queue limit in Mikrotik?

Comment by ali nassar — October 6, 2012 @ 3:54 PM

Just mark the packets destined for YouTube IPs and then create a queue that allows unlimited or the desired bandwidth for these marked packets. You can use a script that marks IPs for youtube.com. Search the wiki and forum; there are lots of examples out there.

Comment by Syed Jahanzaib / Pinochio~:) — October 7, 2012 @ 2:37 PM

Hello Sir,

I have been running your setup on my company’s network and is working well except I have noticed some issue that I can’t get a fix for.

I have noticed that nginx is hogging my network even when users are not watching youtube. even after setting these directive as follows (quick_abort_min to 0 and quick_abort_max to 0) I have noticed that nginx keeps hogging my network bandwidth.

I used the following command to show ports that are hogging my network

sudo iftop -P

and the following command to show which process the connection belongs to

sudo netstat -tpn | grep #port number

I found huge number of ports and most of them belong to nginx process.

Any ideas?

Thank you,

Comment by Badr — October 16, 2012 @ 4:20 PM

I have not faced such an issue. I implemented the nginx-based YouTube caching method at a friend’s network, and it has been working well for a long time, caching YouTube videos nearly perfectly. It also stops downloading the video if the user stops or aborts it. Since YouTube changed its method to deliver videos in chunks (1.7 MB each), it has become easier and more manageable for cache admins.

There must be some squid configuration mistake.

Comment by Syed Jahanzaib / Pinochio~:) — October 17, 2012 @ 8:47 AM

I am temporarily solving the problem by using an automated script that restarts nginx at regular intervals. I will do a more in-depth check and come back later.

Thanks,

Comment by Badr — October 18, 2012 @ 2:25 AM

The problem seems to have gone away after I changed keepalive_timeout to 1 in nginx.conf.

Comment by Badr — October 24, 2012 @ 5:11 PM

Hi all.

While the cached part of a video is being transferred to the client, Squid stops responding. The moment the transfer ends, Squid responds again.

Bests.

Comment by Mauricio — October 24, 2012 @ 11:28 AM

dear sir

I want to know the maximum hard disk size that can be used. Can I use more than 10 TB?

Comment by yonis — October 20, 2012 @ 10:02 PM

I guess you can.

Comment by Syed Jahanzaib / Pinochio~:) — October 22, 2012 @ 10:34 AM

Also, can I use Ubuntu 12 with Squid 3, or is Ubuntu 12 with Squid 2.7 better? Is there a standard server I can buy for caching YouTube or any movie, or is this the only way? And if I want to build a server with the maximum data capacity, can you please give me the details for such a server?

Comment by yonis — October 20, 2012 @ 10:05 PM

Hello,

very good this solution works well with Ubuntu 12.04 with squid3 and XP clients.

Question: do you think it is possible to move nginx to another server to split the load between two servers?

Comment by guidtz — October 23, 2012 @ 7:22 PM

[…] this post is actually just a copy-paste from the aacable blog. […]

Pingback by cara cache video youtube dengan squid + nginx di ubuntu | blognya mattnux — October 24, 2012 @ 1:03 PM

On CentOS you should edit line 1 of ruby.rb like this:

change #!/usr/bin/env ruby1.8 to #!/usr/bin/env ruby

After that, run your Squid / Lusca again.

– Furqon

Comment by Furqon . SY — October 26, 2012 @ 1:54 AM

This seems to be working well for me, but only for 360p and 480p video. i see videos with filenames containing itag=34 and itag=35

But when I watch a 720p or 1080p video, no files are saved in the nginx folder.

Does the system not work with 720p or 1080p videos?

Comment by Jeff Jakubowski — October 29, 2012 @ 8:52 PM

I have not tested it for 720p or 1080p. I will try it.

Comment by Syed Jahanzaib / Pinochio~:) — October 30, 2012 @ 11:33 AM

Syed, i would like to cache only youtube. But i dont know if making the squid conf is enough.

Can you please tell me..?

Thanx

Comment by Mauricio — October 31, 2012 @ 12:31 AM

Does it have to be transparent?

For testing purposes, I’ve just got my web browser configured to use the caching server as a forward proxy, on port 8080.

I’m having a really big problem being able to figure out if it’s actually working properly.

I see videos in the /usr/local/www/nginx_cache/files folder, yet if I watch the same video after clearing my browser cache, it doesn’t seem to play back properly.

Comment by Jeff Jakubowski — October 31, 2012 @ 8:57 PM

Hello Sayed.

Is the nginx method still working? Mine is not working anymore; the video says an error occurred.

I have checked nginx error log file and it says this : ” *6 tc.v5.cache1.c.youtube.com could not be resolved( 3: Host not found), client: 127.0.0.1, server: , request ….”

Thanks,

Comment by Badr — November 10, 2012 @ 5:14 PM

Never mind. I solved the problem.

The nginx.conf has to be modified now.

In the location @proxy_youtube section,

I put “resolver 8.8.8.8” instead of the old IP address.

Comment by Badr — November 10, 2012 @ 5:21 PM

Good to hear that.

Comment by Syed Jahanzaib / Pinochio~:) — November 12, 2012 @ 10:25 AM

I have been unable to sign in to Hotmail for the last 3 days. Nothing was changed in any config file. Please help.

Comment by rehmat ali — November 11, 2012 @ 8:57 PM

Try the following:

# Clear cache and restart

# Change DNS Server IP to some other Public DNS Server

Comment by Syed Jahanzaib / Pinochio~:) — November 12, 2012 @ 10:23 AM

Which cache? DNS cache or Squid cache? Please specify, and please also paste the command if one is needed.

Comment by rehmat ali — November 12, 2012 @ 11:26 PM

Hello,

There is a problem with HTML5 (video/webm). I think this could be because of the different HTTP status (206 Partial Content and Range requests). Videos are loaded through the proxy and it is possible to watch them, but nginx just doesn’t save the downloaded files from tmp to the cache.

Any suggestion how to solve this problem?

Comment by 4rch0n — November 22, 2012 @ 8:33 PM

I stopped working on this project a long time ago. The last time I checked, I remember that html5/webm was not being cached.

Comment by Syed Jahanzaib / Pinochio~:) — November 22, 2012 @ 9:50 PM

sir;

How to flush (clear) cache ?

Comment by muhammad magdy — November 23, 2012 @ 10:33 PM

Dear Jahanzaid,

I use squid with tproxy (divert) feature and YT does not show video. If I do mangle -F (clear tproxy settings), it works fine. If I use port 3128 from a browser it works fine, too. If I use tproxy everything works but there is no video. Please help.

Comment by Tproxy_user — November 27, 2012 @ 6:13 AM

I have not used TPROXY Yet, so no idea 🙂

Comment by Syed Jahanzaib / Pinochio~:) — November 27, 2012 @ 12:14 PM

If I connect through a proxy in a browser I see logs in nginx/access.log. When I use tproxy I see nginx.rb working in syslog, but nothing appears in nginx/access.log. Any ideas? I changed nginx.conf to “listen 8081” instead of “listen 127.0.0.1:8081”. I think the point is the IP the request is coming from. In the case of a pure proxy it is the external address of the proxy. In the case of tproxy it is the client’s address.

Comment by Tproxy_user — November 27, 2012 @ 12:53 PM

I solved this problem by nat-ing google IP’s instead of tproxy interception. Kind of workaround.

Dear Jahanzaib, what is the procedure of cleaning up nginx’s files? As far as I see the rb procedure only stores them. Is there a script that deletes files not in use for some time?

Comment by Tproxy_user — November 28, 2012 @ 5:19 AM

Actually there is no built-in method to clean the files stored by nginx. But you can create a script that checks for files that have not been accessed in the last xx days, deletes them, and is scheduled to run every night. I used this method on Windows to remove SAP logs older than 10 days to save space. On Linux it is much easier and more customizable to do this.

Comment by Syed Jahanzaib / Pinochio~:) — November 28, 2012 @ 10:22 AM

Syed, I’m running lusca + imComum and is running ok, I guess you not follow the cookbook.

Here I’m using Lusca compiled with “–enable-viollations”. Take a glimpse.

Best Regards.

Comment by int21 — November 28, 2012 @ 6:44 PM

Dear Jahanzaib,

Are you saying that nginx does not have a database of the files stored in its directory and looks into the directory every time a request comes in? In that case there should be a certain number of files beyond which it becomes too slow.

Comment by Tproxy_user — November 29, 2012 @ 4:52 AM

It does not have its own database. It stores files under the directory set by the following directive:

root /usr/local/www/nginx_cache/files;

Comment by Syed Jahanzaib / Pinochio~:) — November 29, 2012 @ 3:13 PM

(schedule this script to run daily at night.)

So how do I write this script, to reduce the cache size on the hard drive?

Comment by muhammad magdy — December 3, 2012 @ 12:10 AM

#!/usr/bin/perl
@result = qx{df};
foreach $elem (@result) {
    if ($elem =~ /dev\/md0.+ (.\d)\%.+/)
    {
        if ($1 > 95)
        {
            exec ("cd /usr/local/www/nginx_cache/tmp/ && ls -u | tail --lines=1000 | xargs $
        }
    }
}

This will delete the 1000 least recently accessed files from the nginx files directory if the used space on disk /dev/md0 exceeds 95%. Run it as frequently as you wish.

Comment by Tproxy_user — December 3, 2012 @ 4:42 AM
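The same idea as the Perl script above (when the disk is nearly full, drop the files that were accessed longest ago) can be sketched in Python as follows. This is a rough equivalent under assumptions, not a port of the original: the function names are hypothetical, the 95% threshold and the count of 1000 are taken from the comment, and the percentage computed here can differ slightly from what df prints.

```python
import os
import shutil


def used_percent(path):
    """Percentage of the filesystem holding path that is in use."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


def least_recently_accessed(cache_dir, count):
    """Paths of the count regular files with the oldest access times."""
    files = [e for e in os.scandir(cache_dir) if e.is_file(follow_symlinks=False)]
    files.sort(key=lambda e: e.stat(follow_symlinks=False).st_atime)
    return [e.path for e in files[:count]]


def purge_if_full(cache_dir, threshold=95.0, count=1000, usage_fn=used_percent):
    """Delete up to count old files when disk usage exceeds threshold percent."""
    if usage_fn(cache_dir) <= threshold:
        return []
    victims = least_recently_accessed(cache_dir, count)
    for path in victims:
        os.remove(path)
    return victims
```

At roughly 1.7 MB per chunk, 1000 files free about 1.7 GB per run, so the run interval has to be short enough that less than that arrives between runs. The usage_fn parameter exists only so the logic can be tried without actually filling a disk.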

great! thank you 🙂 for sharing.

May I put this in the Article ?

Comment by Syed Jahanzaib / Pinochio~:) — December 3, 2012 @ 8:26 AM

where should i put this script?

Comment by Ma'el — December 25, 2012 @ 8:23 AM

Please do.

Of course the script needs to be adapted with the right values. 1000 files equals about 1.7 GB (at roughly 1.7 MB per chunk). If you run it every 10 minutes, the YouTube video downloaded in any 10-minute window should amount to less than 1.7 GB.

Comment by Tproxy_user — December 3, 2012 @ 9:46 AM

thanks guys for sharing knowledge

Comment by muhammad magdy — December 4, 2012 @ 12:47 AM

“An error occurred, please try again later” on YouTube videos.

What can I do?

Comment by muhammad magdy — December 5, 2012 @ 8:56 PM

Salam,

I’m very interested in setting up videocache for my office … and it’s supported on FreeBSD 8.3

Comment by Nazir — December 6, 2012 @ 6:09 AM

Try this

http://code.google.com/p/yt-cache/wiki/Installation?ts=1326475991&updated=Installation

I have already installed and tested it. It does a pretty good job: file indexes are stored in MySQL, there is a full web GUI, and so forth.

Comment by Tproxy_user — December 14, 2012 @ 4:23 AM

My Ubuntu server can open Facebook and can log in to YM, MSN and Gmail. But why can’t my client even open the Facebook website or log in to YM, MSN and Gmail? Help me. Salam.

Comment by faizrazak — December 21, 2012 @ 11:52 AM

[…] https://aacable.wordpress.com/2012/08/13/youtube-caching-with-squid-nginx/ […]

Pingback by Youtube caching with SQUID 2.7 [using storeurl.pl] « Syed Jahanzaib Personnel Blog to Share Knowledge ! — December 21, 2012 @ 2:02 PM

Sir, thanks for the Squid configuration. Everything works fine, including the YouTube caching, but when I enable nginx the cache consumes all the bandwidth and the internet goes down, so I have to stop it again.

What can I do?

Comment by Mohammad — December 24, 2012 @ 6:39 PM

I agree. In my experience it keeps downloading when the client changes the video quality during playback.

Comment by Badr — December 27, 2012 @ 1:18 PM

OK, but brother, from your experience is there a solution? I mean some rules I could add.

Comment by Mohammad — December 27, 2012 @ 3:26 PM

To update Ubuntu, run: # apt-get update

Do not use apt-get install update.

Comment by kidx13 — December 27, 2012 @ 9:18 PM

Hi, please confirm whether yt-nginx-squid works under Windows 7. It is working well under Windows XP.

Comment by rik — December 30, 2012 @ 3:31 PM

YouTube has been banned in our country for a long time, so I can’t confirm whether this method still works.

Comment by Syed Jahanzaib / Pinochio~:) — December 30, 2012 @ 9:30 PM

YouTube banned!? Never mind, you could lease a server from a hosting company, set up a VPN server on it, create a tunnel and route the YouTube IPs through that VPN server.

Comment by rik — December 31, 2012 @ 8:41 PM

Sorry, you can ignore my last post and remove it. When something is banned in Pakistan, then it’s really banned.

Comment by rik — December 31, 2012 @ 8:47 PM

Syed, I use nginx with Squid. I followed all the steps and it caches everything fine with YouTube, but my nginx/Squid server takes all the bandwidth, so I need to stop nginx. Sir, is there a setting I can add so that when I close YouTube the session is closed too? It is a big problem, please help me.

Comment by mohammad — January 6, 2013 @ 7:10 PM

Dear Syed,

I have a Lusca cache with three 500 GB hard disks:

cache_dir aufs /cache-1/ 400000 939 256
cache_dir aufs /cache-2/ 400000 939 256
cache_dir aufs /cache-3/ 400000 939 256

I used your formula but my kusca is slow.

Can you help me?

Thank you, salam.

Comment by moustafa halawani — January 8, 2013 @ 1:57 AM

* LUSCA

Comment by moustafa halawani — January 8, 2013 @ 1:58 AM

There could be many reasons for slow browsing. Post your complete hardware specs, Internet connection details, active user load and the bandwidth packages.

Comment by Syed Jahanzaib / Pinochio~:) — January 9, 2013 @ 8:42 AM

OK sir, but is the formula correct? And what are aufs and ufs? Thank you, sir.

Comment by moustafa halawani — January 12, 2013 @ 8:21 AM

Assalam-o-Alaikum. Sir, can we set up one separate cache server for YouTube and another separate server for website caching?

Comment by Arsalan Malick — January 8, 2013 @ 4:03 PM

Yes, you can, but it is usually not required in a normal situation.

Comment by Syed Jahanzaib / Pinochio~:) — January 9, 2013 @ 8:41 AM

Assalam. I installed Ubuntu 10.04 on my 3 TB hard disk without any partitioning. Which setting in squid.conf or storeurl keeps cached data on my 3 TB disk longer, even if a YouTube video has not been watched for more than 4 to 8 months? I run a Squid cache server for more than 50 users in my office, so I want the cache to use the whole 3 TB disk and, once it is more than 95% full, automatically delete the oldest cached objects.

Comment by faizrazak — January 9, 2013 @ 7:39 PM

Hello folks and assalamualaikum wr.wb 🙂, I had a problem with “env: ruby1.8: No such file or directory”

and I added

+ /usr/local/bin/ruby

in squid.conf:

url_rewrite_program /usr/local/bin/ruby /usr/local/etc/squid/nginx.rb

and now if I run “ps ax | grep squid” I find ruby and perl 🙂

gotroot# ps ax | grep squid
8431 ?? Is 0:00.00 /usr/sbin/squid -D
8433 ?? I 0:08.73 (squid) -D (squid)
8540 ?? Is 0:00.06 (ruby) /usr/local/etc/squid/nginx.rb (ruby)
8541 ?? Is 0:00.04 (ruby) /usr/local/etc/squid/nginx.rb (ruby)
8542 ?? Is 0:00.05 (ruby) /usr/local/etc/squid/nginx.rb (ruby)
8543 ?? Is 0:00.05 (ruby) /usr/local/etc/squid/nginx.rb (ruby)
8544 ?? Is 0:00.07 (ruby) /usr/local/etc/squid/nginx.rb (ruby)
8545 ?? Is 0:00.04 /usr/bin/perl /usr/local/etc/squid/lala.pl (perl5.12.
8546 ?? Is 0:00.02 /usr/bin/perl /usr/local/etc/squid/lala.pl (perl5.12.
8547 ?? Is 0:00.03 /usr/bin/perl /usr/local/etc/squid/lala.pl (perl5.12.
8548 ?? Is 0:00.03 /usr/bin/perl /usr/local/etc/squid/lala.pl (perl5.12.
8549 ?? Is 0:00.04 /usr/bin/perl /usr/local/etc/squid/lala.pl (perl5.12.
8550 ?? Is 0:00.03 /usr/bin/perl /usr/local/etc/squid/lala.pl (perl5.12.
8551 ?? Is 0:00.02 /usr/bin/perl /usr/local/etc/squid/lala.pl (perl5.12.
8537 1 I+ 0:00.00 tail -f /var/log/squid/cache.log

My box is FreeBSD 9.0.

And if you get an error like “unknown directive "gzip_static"”,

you can recompile nginx (stable version) with these options:

./configure --with-pcre --with-file-aio --with-http_gzip_static_module --with-http_stub_status_module --prefix=/usr --conf-path=/etc/nginx/nginx.conf --pid-path=/var/run/nginx.pid --error-log-path=/var/log/nginx/error.log && make && make install

I hope this is helpful.

Comment by denzfarid — January 23, 2013 @ 11:31 PM

Install Ruby. It’s described in the article.

Comment by Syed Jahanzaib / Pinochio~:) — January 24, 2013 @ 8:45 AM

Do you have any way to cache Android and iPhone apps?

We are in the smartphone world now.

Thank you.

Comment by Hussein — January 24, 2013 @ 6:06 AM

I have not tested YouTube caching for a long time because YouTube is banned in our country.

Comment by Syed Jahanzaib / Pinochio~:) — January 24, 2013 @ 8:43 AM

Dear Syed Jahanzaib, salam alaikum,

I am not asking you about YouTube.

I am asking about Android and iPhone applications.

Thank you.

Comment by Hussein — January 25, 2013 @ 2:36 AM

??????

Comment by Hussein — January 27, 2013 @ 7:59 PM

Assalamualaikum Wr. Wb. Thank you for this tutorial; Squid cache + nginx + Ruby is now running on my server.

However, the bandwidth cannot be limited when a YouTube video is opened for the first time

(the delay parameters in squid.conf do not work).

Why? And what is the solution?

Before I installed nginx and Ruby, the delay parameters did restrict YouTube streaming.

Comment by unyil-indonesia — January 24, 2013 @ 10:18 PM

Squid does not download the video; nginx downloads it. So Squid’s delay parameters cannot be applied to the YouTube download.

Comment by Syed Jahanzaib / Pinochio~:) — January 26, 2013 @ 3:22 PM

Hello friend, you do not know me; I’m from Argentina. I have been trying your tutorial for days on Debian 6.0.6 and I always run into a problem. I also tried Ubuntu 10.10, but since PuTTY does not work I cannot copy and paste. I am using Oracle VM VirtualBox. What could the error in Debian be? Can you give me advice regarding my problem? Many thanks.

Comment by leandroz — January 25, 2013 @ 12:16 AM

You can install the OpenSSH server to enable SSH login via PuTTY. To install the SSH server on Ubuntu, use the following command:

sudo apt-get install openssh-server

Comment by Syed Jahanzaib / Pinochio~:) — January 26, 2013 @ 3:20 PM

This is what it says when I try to start it. Where am I going wrong?

squid -z
2013/01/25 00:00:14| Squid is already running! Process ID 2303
root@proxy:/home/niuncredo# service squid start
Starting Squid HTTP proxy: squidCreating squid cache structure … (warning).
2013/01/25 00:00:25| Squid is already running! Process ID 2303
failed!
root@proxy:/home/niuncredo# service nginx restart
Restarting nginx: nginx.

Comment by leandroz — January 25, 2013 @ 8:04 AM

I changed to Ubuntu 10.04 with VMware Workstation and now I get a different error. Where am I going wrong? I would be very grateful for an answer! Regards

squid -z
FATAL: Unable to open configuration file: /etc/squid/squid.conf: (13) Permission denied
Squid Cache (Version 2.7.STABLE7): Terminated abnormally.